Compliance teams are moving faster with AI assistance. The gains are real, but the same speed that helps with repetitive tasks can also hide AI risk.

Before joining Resolver, I spent years in audit and compliance roles (SOX and NI 52-109 oversight) where proving who approved what wasn’t optional. Just ask any auditor. Those lessons about traceability and ownership haven’t changed with AI. If anything, the stakes are higher.

The SEC recently launched an internal AI Task Force to explore how artificial intelligence can improve efficiency, oversight, and innovation across the agency. While the focus is currently on internal use, the move signals growing regulatory attention on AI’s role in financial decision-making. As agencies sharpen their own tools, regulated firms should expect new scrutiny on AI features in compliance software, especially when they influence reports, controls, or policy language.

That scrutiny will raise questions many teams can’t yet answer. AI-generated content often slips into production without a clear review path. A control gets drafted using an assistant. Someone reuses the language in a framework. Later, no one can show who approved it or where it came from. The tool worked, but the oversight didn’t.

Regulators now expect proof of how the content was reviewed, when, and by whom. Without that structure, risk and compliance teams are left defending decisions they didn’t track and can’t validate. The teams that build an AI governance structure early will gain both speed and credibility.

In this blog, I’ll walk through the policy-ready features GRC teams should look for in AI-powered risk and compliance automation. With the right structure, AI tools can speed up reviews and standardize documentation, two areas where many programs struggle most under audit. When reviewers aren’t aligned or decisions aren’t recorded the same way every time, it undermines confidence in the results. AI can help fix that, but only if governance is built in from the start.

What governance-ready AI actually means

AI features in GRC tools are already changing how teams handle compliance work. Large language models (LLMs) help draft controls, summarize regulations, and identify obligations faster. But speed alone doesn’t make these innovations compliant. In GRC, every AI output needs to leave a trail that clearly shows who reviewed it, which policy it links to, and when it was cleared for use.

When we say governance-ready AI, we mean AI-powered tools built with oversight at the core of every workflow. Each output should create a record that shows who checked it, when approval happened, and what policy or regulation it connects to. Without a built-in approval path, AI becomes another blind spot, one that’s hard to explain during audit.

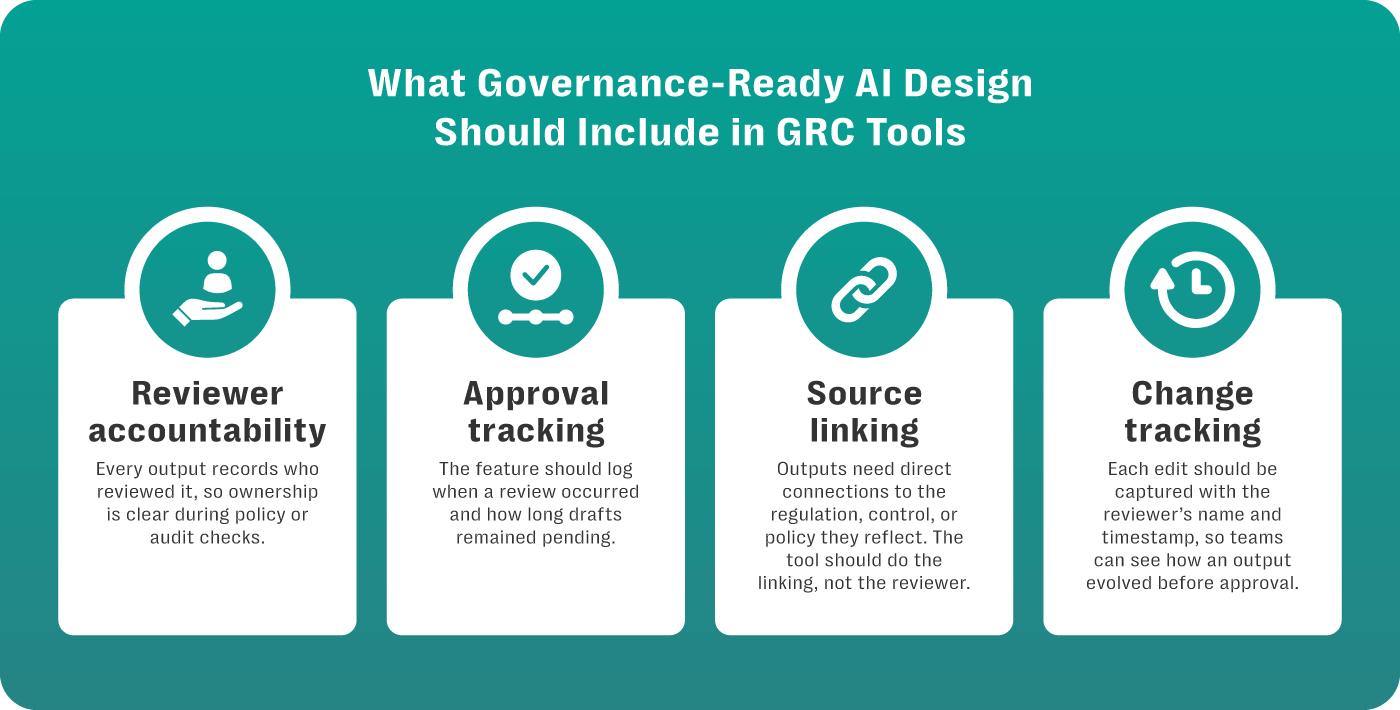

Strong AI design should include:

- Reviewer accountability: Every output records who reviewed it, so ownership is clear during policy or audit checks.

- Approval tracking: The feature should log when each review occurred and who performed it.

- Source linking: Outputs need direct connections to the regulation, control, or policy they reflect. The tool can suggest connections, but GRC teams are the ones responsible for validating and finalizing them.

- Change tracking: Each edit should be captured with the reviewer’s name and timestamp, so teams can see how an output evolved before approval.

When these details are missing, oversight becomes reactive. Teams piece together information from messages and notes instead of relying on a single, authoritative record. Governance-ready AI features in compliance software make review, ownership, and traceability part of the workflow, not an afterthought.
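To make the four elements above concrete, here is a minimal Python sketch of what a single audit record for an AI output might hold. It is an illustration under my own assumptions, not how any particular product stores data: the class names, field names, user names, and the policy ID `POL-014` are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class Change:
    """One edit to an AI output, with the editor's name and a timestamp."""
    editor: str
    summary: str
    at: datetime


@dataclass
class OutputRecord:
    """Hypothetical audit record attached to a single AI-generated output."""
    text: str
    source_links: List[str] = field(default_factory=list)  # validated by a person
    changes: List[Change] = field(default_factory=list)
    reviewer: Optional[str] = None
    approved_at: Optional[datetime] = None

    def edit(self, editor: str, new_text: str, summary: str) -> None:
        # Change tracking: every edit is stored with who made it and when.
        if self.approved_at is not None:
            raise ValueError("approved outputs are read-only; start a new draft")
        self.text = new_text
        self.changes.append(Change(editor, summary, datetime.now(timezone.utc)))

    def approve(self, reviewer: str) -> None:
        # Source linking is required before approval can be recorded.
        if not self.source_links:
            raise ValueError("link the output to a regulation, control, or policy first")
        self.reviewer = reviewer
        self.approved_at = datetime.now(timezone.utc)

    def missing_evidence(self) -> List[str]:
        """List the items an auditor would ask for that are not yet recorded."""
        gaps = []
        if self.reviewer is None:
            gaps.append("reviewer")
        if self.approved_at is None:
            gaps.append("approval timestamp")
        if not self.source_links:
            gaps.append("source link")
        return gaps


record = OutputRecord(text="Draft control: access reviews occur quarterly.")
record.edit("j.doe", "Access reviews are performed quarterly by the system owner.", "named an owner")
record.source_links.append("POL-014")  # hypothetical policy ID
record.approve("a.smith")
```

The point of `missing_evidence()` is that gaps surface before an audit, not during one: a record with no reviewer, timestamp, or source link is visibly incomplete.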

3 key audit-ready AI features in compliance software

Governance-ready AI does more than help GRC teams produce content quickly. It also has to prove how that content was created, reviewed, and approved. To meet governance expectations, AI features need to reflect how compliance decisions are actually made.

Compliance teams with structured oversight don’t rely on reminders or after-the-fact validations. They use tools that embed oversight directly into their workflows.

1. Structured prompts with clear scope

Structured prompts make governance part of the workflow instead of something added after the fact. They’re designed around the task they’re meant to support—like summarizing a regulation, drafting a control, or pulling out a policy requirement. That task-specific structure limits variability, helps reduce hallucinations and inaccuracies, and makes responses easier to compare, validate, and reuse across similar decisions.

Instead of starting from an open-ended prompt, teams get consistency because the format reflects how they already work. That clarity also makes it easier for reviewers to understand what to look for and how to assess the result.

Scoped prompts take the guesswork out of how outputs are generated, so results can be traced and defended, if need be. By setting clear limits, GRC teams avoid compliance risks caused by creative or misleading AI responses. It also means reviewers spend less time checking quality and more time focusing on whether the output aligns with policy.
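One way to picture a scoped prompt is as a fixed template per task, with free-form requests rejected outright. The Python sketch below is only an illustration: the task names, the template wording, and the sample regulation text are hypothetical, and a real tool would pass the resulting prompt to whatever model it uses.

```python
from string import Template

# Hypothetical task-scoped templates; the wording is illustrative only.
TEMPLATES = {
    "summarize_regulation": Template(
        "Summarize the regulation text below in at most $max_bullets bullet points. "
        "Use only the text provided and cite the section for each point.\n\n$source_text"
    ),
    "draft_control": Template(
        "Draft one control statement for the obligation below. "
        "State the owner role, the frequency, and the evidence retained.\n\n$source_text"
    ),
}


def build_prompt(task: str, **fields: str) -> str:
    """Return a prompt for a known task, or fail instead of sending free-form text."""
    if task not in TEMPLATES:
        raise ValueError(f"unsupported task: {task}")
    # Template.substitute raises KeyError when a required field is missing,
    # so an incomplete request never reaches the model.
    return TEMPLATES[task].substitute(**fields)


prompt = build_prompt(
    "summarize_regulation",
    max_bullets="5",
    source_text="Section 4.2: Records must be retained for seven years.",  # made-up text
)
```

Because every request for a given task uses the same wording, reviewers can compare outputs like for like, and the template itself becomes something audit can inspect.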

AI doesn’t decide; it drafts, and humans approve. The tool supports the process, but reviewers still own the outcome.

Structured prompts give teams a clear, repeatable way to review AI outputs and align them with internal policies. When approval frameworks are set upfront, audit and policy teams have something concrete to validate. Without traceability or formal review checkpoints, it becomes harder to defend how decisions were made—especially under regulatory pressure.

But clarity in the prompt only gets you part of the way there. Governance also depends on who reviews the output, when they review it, and whether that approval is captured in the system. That review step needs to happen inside the workflow. Not in an inbox, shared doc, or side conversation.


2. Role-based reviews

Scoped prompts help guide what AI generates, but they’re not enough. Reviews need to happen in-system, not in chats or buried in emails where they can’t be tracked down when it counts. Your AI-powered compliance software should be designed to be part of existing workflows with clear instructions on reviewing the information.

Role-based reviews help make ownership clear by showing who approved what, and when. Without that, it’s hard to track accountability or prove that the right steps were followed. Auditors expect to see documented approvals before use or release, and when checkpoints aren’t visible, reviews stall and delays pile up.

In-system reviews reflect how compliance teams already work. Reviewers have roles. Approvals happen in sequence. Good systems don’t disrupt that — they make it easier to capture and show.
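As a rough sketch of approvals happening in sequence, the Python example below only accepts a sign-off from the role that is next in line and records each one with a timestamp. The role names, the two-step sequence, and the user names are assumptions for illustration; real sequences depend on the organization.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional, Tuple

# Hypothetical review sequence; real role names depend on the organization.
REVIEW_SEQUENCE = ["control_owner", "compliance_lead"]


@dataclass
class ReviewTrail:
    """Ordered approvals for one AI output: (user, role, timestamp)."""
    approvals: List[Tuple[str, str, datetime]] = field(default_factory=list)

    def next_role(self) -> Optional[str]:
        """Role that must approve next, or None when the review is complete."""
        done = len(self.approvals)
        return REVIEW_SEQUENCE[done] if done < len(REVIEW_SEQUENCE) else None

    def approve(self, user: str, role: str) -> None:
        expected = self.next_role()
        if expected is None:
            raise ValueError("review already complete")
        if role != expected:
            # Out-of-order or wrong-role approvals are refused, not silently logged.
            raise PermissionError(f"next approval must come from {expected}, not {role}")
        self.approvals.append((user, role, datetime.now(timezone.utc)))

    def released(self) -> bool:
        """True only once every role in the sequence has signed off."""
        return self.next_role() is None


trail = ReviewTrail()
trail.approve("j.doe", "control_owner")
trail.approve("a.smith", "compliance_lead")
```

An output is only treated as released once `released()` is true, which is the documented-approval-before-use check auditors ask about.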

3. Prompt, output, and revision history

You need a clear, accessible trail from the original AI prompt to the final approved output. One that shows every revision, comment, and reviewer along the way.

Automatic version history proves compliance under review. It’s not enough for teams to say, “We reviewed this.” They need to show how it was reviewed, what changed, and why. Having a full prompt-to-approval record eliminates doubt and protects teams from audit findings or regulatory pushback. It also strengthens collaboration, since multiple reviewers can see what’s changed in real time.

Without automatic version history, teams waste hours tracking down who changed what, and risk missing something critical. If version history lives in a backend log no one can access, it’s not governance — it’s just storage. A policy-ready system makes that history visible, readable, and reportable.

Prompt, output, and revision history lets you check how AI-generated content was used — or if it should’ve been used at all. Otherwise, reused outputs can slip through without context, and teams are left guessing what changed and why. That makes it hard to stand up to policy reviews or defend choices under scrutiny. Gaps like these don’t just slow things down, they lead to findings, rework, and missed deadlines.
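To show the difference between history that is merely stored and history that is readable, here is a small Python sketch that turns a list of revisions into a plain-text report using the standard library's `difflib`. The `Revision` fields, the sample prompt, and the names are hypothetical, and timestamps are left out to keep the example short.

```python
import difflib
from typing import List, NamedTuple


class Revision(NamedTuple):
    author: str  # a person, or "assistant" for the initial draft
    note: str    # why the change was made
    text: str


def change_report(prompt: str, revisions: List[Revision]) -> str:
    """Render a prompt-to-final trail as plain text a reviewer can read."""
    lines = [f"PROMPT: {prompt}"]
    if revisions:
        lines.append(f"v1 by {revisions[0].author}: {revisions[0].note}")
    # Compare each revision with the one before it and show what changed.
    for i, (prev, cur) in enumerate(zip(revisions, revisions[1:]), start=2):
        lines.append(f"v{i} by {cur.author}: {cur.note}")
        lines.extend(
            difflib.unified_diff(
                prev.text.splitlines(),
                cur.text.splitlines(),
                fromfile=f"v{i - 1}",
                tofile=f"v{i}",
                lineterm="",
            )
        )
    return "\n".join(lines)


history = [
    Revision("assistant", "initial draft", "Access reviews occur quarterly."),
    Revision("a.smith", "named an owner", "Access reviews are performed quarterly by the system owner."),
]
report = change_report("Draft a control for user access reviews.", history)
```

Printing `report` gives the prompt, each version's author and reason, and a line-by-line diff, which is the "what changed and why" a reviewer needs without digging through a backend log.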


Resolver’s approach to incorporating AI in GRC software

AI should act like a junior team member: helpful, structured, and accountable. It supports the process but doesn’t make the decisions. Resolver’s Regulatory Compliance Management software is designed the same way: clear prompts, traceable actions, and built-in review steps that match how compliance teams already work.

Every action leaves a traceable record, from the initial AI prompt to the final approval. Oversight is part of the workflow, not an afterthought. That way, teams are confident that each output can be reviewed, defended, and passed through policy.

The right AI features don’t stop at content generation — they support the full compliance process.
