It’s easy to tell when a GRC program is stuck.

Reports bounce from inbox to inbox. Each team works from its own version of the truth. Meetings turn into debates about which spreadsheet is right. No one’s sure who owns what anymore.

On top of that, risk, audit, and compliance all have different goals. Risk wants faster assessments. Audit wants stronger documentation. Compliance just wants a clean report. Each team collects its own data, and what should be a single process turns into three separate ones.

That separation creates friction. Every request means another search through spreadsheets, drives, or email threads. People copy information instead of sharing it. Small mistakes multiply. Weeks disappear trying to confirm what’s already been done.

That’s what a siloed GRC program feels like: disjointed, reactive, hard to scale.

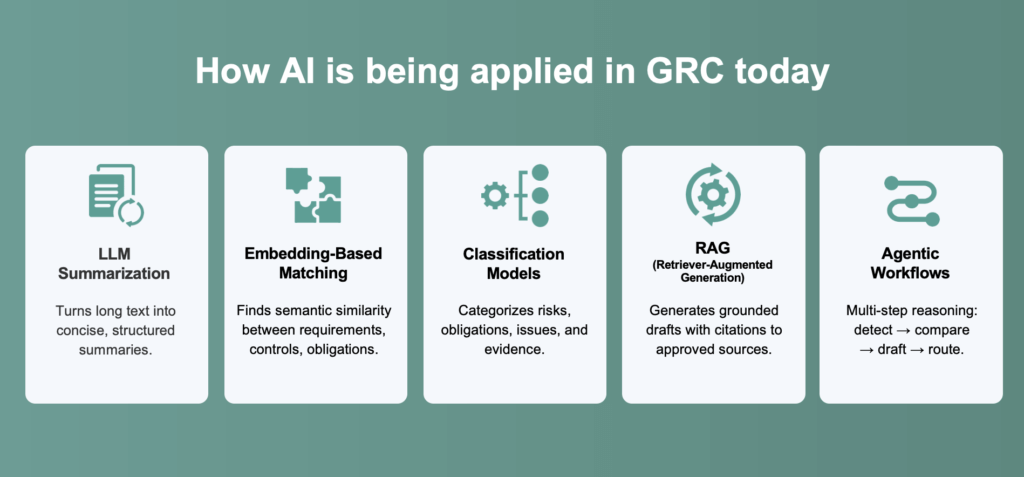

AI in GRC can help pull those pieces together. It connects policies, controls, and evidence so teams can see the same picture at once. Not by taking away human judgment, but by giving it better context.

If your GRC program feels fragmented, here are five signs it might be stuck in a silo, and what you can do about it.

Sign #1: Manual, text-heavy processes are slowing you down

Most GRC work lives in documents. Policies, controls, audit reports, SOC summaries — they all sit in Word files or Excel spreadsheets. Every policy, control, and audit finding gets copied, saved, and reattached in a new format. Teams spend more time chasing information than using it.

It starts small: A policy update gets missed; someone forgets to update a related control; a report gets built on the wrong version of a spreadsheet. One mistake doesn’t seem like a big deal until an audit pulls every file into view. Suddenly, half the team is fixing links and double-checking data that should’ve been right the first time.

That’s a sign your program is stuck in a loop of manual effort. Every task depends on people remembering where things live and how they connect. There’s no shared structure to hold the work together. The risk here isn’t just wasted time; it’s that your records lose credibility when they’re out of sync.

Implementing AI-powered features in GRC platforms can give teams a consistent foundation. These features can read policies, map related controls, and surface differences between frameworks. Instead of manually sorting through hundreds of files, reviewers can start with organized drafts and verify what matters.

This shift doesn’t remove oversight. It helps create one source of truth. When teams spend less time reformatting and reconciling, they can focus on judgment, accuracy, and alignment. That’s where real governance happens.

Sign #2: Risk, audit, & compliance don’t speak the same language

Ask any GRC leader what slows progress, and they’ll point to misalignment. One team focuses on likelihood and impact. Another cares about proof and traceability. And a third wants to see that everything was reviewed by the right person at the right time. Each group has its own priorities and systems to match.

A control might look strong to one team and incomplete to another. A finding that should be simple turns into a long thread of explanations and screenshots. Reports pile up because no one trusts the data from another department.

It’s not intentional. The work just evolved that way. Tools were adopted at different times. Templates changed with each leader. Teams got used to solving problems within their own walls. Over time, language drifted. Everyone’s talking about the same risk but describing it differently.

When that happens, collaboration starts to feel like translation. Meetings become about interpretation, not progress. People spend hours explaining how their process works instead of improving it.

AI can help bring consistency back into the work. When integrated into a GRC platform, it can surface existing controls and suggest where they apply across different requirements. Instead of each team reinventing the approach, they can reuse the same controls with confidence. That shared foundation helps teams stay aligned without forcing them into the same workflow.
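
As a rough illustration of what a shared control library makes possible, the sketch below maps each control to the requirements it supports, then lists the controls that apply to more than one framework. Every identifier here (the control names, the framework labels, the requirement codes, and both helper functions) is hypothetical and chosen only for illustration. None of it is drawn from a specific GRC platform or standard.

```python
from collections import defaultdict

# Hypothetical shared control library: each control lists the
# (framework, requirement) pairs it is believed to support.
CONTROL_LIBRARY = {
    "CTRL-001 Access reviews": [("Framework A", "A-1.2"), ("Framework B", "B-7")],
    "CTRL-002 Backup testing": [("Framework A", "A-4.1")],
    "CTRL-003 Vendor due diligence": [("Framework B", "B-3"), ("Framework C", "C-9")],
}


def frameworks_by_control(library):
    """Return a dict of control name -> sorted list of distinct frameworks."""
    coverage = defaultdict(set)
    for control, requirements in library.items():
        for framework, _requirement in requirements:
            coverage[control].add(framework)
    return {control: sorted(frameworks) for control, frameworks in coverage.items()}


def reusable_controls(library):
    """List controls mapped to more than one framework (candidates for reuse)."""
    return sorted(
        control
        for control, frameworks in frameworks_by_control(library).items()
        if len(frameworks) > 1
    )


if __name__ == "__main__":
    coverage = frameworks_by_control(CONTROL_LIBRARY)
    for control in reusable_controls(CONTROL_LIBRARY):
        print(control, "->", coverage[control])
```

In practice the mappings themselves would still be reviewed by people. The point of the structure is that each team looks up the same control instead of describing it separately.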

When everyone sees the same structure, the conversation changes, and GRC starts to feel connected again.

Sign #3: Regulatory change feels like chaos

You’ve seen it happen: A new rule drops late on a Friday, and your inbox fills before lunch on Monday. Everyone’s scanning summaries, comparing notes, and trying to guess which part of the business it hits first. The volume feels endless, and the response becomes scattered.

Someone starts a tracker. Another team builds a slide deck. Compliance drafts a policy update. Risk opens a new register entry. Nothing lines up because everyone’s working from a different interpretation.

By the time the teams are brought together, weeks have passed. Half the changes overlap, and some contradict older obligations. Everyone’s overworked, and no one’s sure which version is right. That’s not a symptom of a lack of process; it’s a sign that the process doesn’t scale when the ground keeps shifting.

The teams that stay ahead do one thing differently: they centralize context. They know where each rule connects, what it impacts, and who owns the fix. GRC tools that organize those links — instead of adding another layer of tracking — make that possible.

When that context lives in one place, patterns start to appear. Overlaps between frameworks are easier to spot. Similar requirements surface faster. Teams stop reacting and start planning. Instead of three departments doing the same work in isolation, they can share the same source material and adjust once.

When properly integrated into your GRC platform, AI can link related policies and obligations across frameworks, making patterns easier to see. That way, regulatory change stops feeling like a crisis, and GRC starts feeling proactive again.

Sign #4: Innovation gets stuck in approval limbo

You’ve built a case for using AI in your GRC program, and the use case is clear. Then the approval process starts, and momentum slows.

The issue usually isn’t disagreement. It’s uncertainty. Leaders want to understand how AI will be used, what data it touches, and where human review fits. Without shared context, those questions pile up. The proposal stalls, not because it’s flawed, but because no one feels equipped to say yes.

Over time, that hesitation shapes behavior. Teams stop suggesting AI-driven improvements because approvals feel unpredictable. Conversations about modernization stay theoretical. The program keeps running the same way, even as pressure and workload increase.

Programs that move past this treat AI adoption as a governance exercise, not just a technology decision. They define what the AI can and cannot do. They document data use, review points, and ownership early. That clarity gives risk, audit, and compliance a common frame of reference.
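
One way to make those guardrails concrete is to write them down as a structured record that reviewers can check before approval. The sketch below is a minimal, hypothetical example: the field names, the sample use case, and the `missing_guardrails` helper are assumptions for illustration, not a standard schema or a feature of any particular product.

```python
from dataclasses import dataclass, field


@dataclass
class AIUseCase:
    """Hypothetical record describing one proposed AI use in a GRC program."""
    name: str
    allowed_actions: list = field(default_factory=list)
    prohibited_actions: list = field(default_factory=list)
    data_sources: list = field(default_factory=list)
    human_review_points: list = field(default_factory=list)
    owner: str = ""


def missing_guardrails(use_case):
    """Return the names of guardrail fields that are still empty."""
    required = {
        "allowed_actions": use_case.allowed_actions,
        "prohibited_actions": use_case.prohibited_actions,
        "data_sources": use_case.data_sources,
        "human_review_points": use_case.human_review_points,
        "owner": use_case.owner,
    }
    return [name for name, value in required.items() if not value]


# A draft proposal that has not yet named its review points or owner.
draft = AIUseCase(
    name="Draft control mappings",
    allowed_actions=["suggest control-to-requirement mappings"],
    prohibited_actions=["approve mappings without review"],
    data_sources=["policy library"],
)

print(missing_guardrails(draft))  # → ['human_review_points', 'owner']
```

A check like this does not decide anything on its own. It only shows approvers which questions are still unanswered before a proposal reaches them.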

When those guardrails are visible from the start, approval becomes part of the process instead of a barrier at the end. Teams can test AI in small, controlled ways, build trust through evidence, and expand usage with confidence.

Sign #5: Oversight happens after the fact

A siloed program doesn’t just create extra work — it hides the cause of it. When processes, reviews, and evidence live in separate systems, no one sees how decisions connect. Gaps stay invisible until they’ve already caused trouble.

Visibility isn’t about dashboards or data access. It’s about continuity, and knowing how one action affects the next. Integrated GRC programs track that movement in real time. Each update, comment, or review links back to the control or policy it touches. That connection creates a living record of accountability instead of a static archive.

The answer isn’t more reporting. It’s rethinking how information moves. Audit shouldn’t have to reconstruct what compliance already verified. Risk shouldn’t need to recheck what’s been approved. When systems are connected, every function sees the same source material at the same moment.

Integrated GRC platforms help by removing the gaps between teams. When risk, compliance, and audit work from the same controls, evidence, and data, links stay intact without manual effort. Instead of stitching information together across tools, teams see the same picture at the same time. That shared visibility keeps work aligned and reduces the need for constant follow-ups.

When that transparency exists, silos start to fade. People stop searching for proof and start using it.

How AI in GRC provides governance by design, not default

Each of these signs points to the same truth: Silos don’t break themselves. They need structure that connects people, data, and oversight in one place. Without that foundation, progress depends on memory instead of shared understanding.

Modern GRC solutions change that dynamic. When risk, compliance, and audit share the same platform, effort compounds instead of repeating. Data stays consistent. Teams see the same evidence, the same controls, and the same results, so decisions start to carry more weight.

With Resolver’s Integrated GRC software, Risk, Compliance, and Audit are unified in a single connected system, cutting duplication and surfacing the information that matters most. Teams work from a shared control library. Dashboards update in real time. Reviewers see how every change links to the bigger picture.

The result is faster collaboration, fewer blind spots, and oversight that builds confidence instead of slowing progress. Watch the on-demand replay of our webinar, “How to Build a Scalable, Integrated GRC Program with AI-Driven Insights,” to learn how leading teams are building connected systems that scale — and how Resolver helps make that possible.