Financial institutions, insurers, credit unions, and other regulated organizations enter 2026 with more pressure than capacity. AI in risk management now ties directly to regulatory expectations, and operational resilience rules are more prescriptive. Many GRC teams still depend on manual updates, disconnected tools, and reporting cycles that no longer match the speed of change.

Leaders want clearer insight. Regulators expect stronger evidence. Business lines adopt new AI tools faster than oversight can keep up. That leaves risk and compliance teams piecing together issues across spreadsheets, systems, and shared folders without a dependable source of truth.

That’s where GRC automation and AI governance play a critical role. These capabilities reduce repetitive work, keep controls aligned to new requirements, improve resilience across critical services and vendors, and support audit readiness with accurate data and faster reporting. They don’t replace experienced analysts; they make their work more reliable.

We’ve outlined four strategies we see across banks, insurers, and other regulated industries. Each one helps GRC leaders build a modern, connected program that keeps pace with regulatory demands, advances in AI, and the operational risks that will define 2026.

Here’s where to focus as expectations rise.

Strategy 1: Expand operational resilience as a cross-enterprise capability

Operational resilience has moved beyond traditional business continuity management. It now defines how organizations maintain critical operations during disruptions and, more importantly, how they prove that capability under regulatory scrutiny. Regulators, investors, and customers expect clear evidence that you can operate through cyber events, supply chain failures, and financial instability. Meeting those expectations requires more than documenting plans. It demands real-time insights, scenario-based stress testing, and the ability to respond faster than the threat evolves.

To meet these requirements, financial institutions are beginning to incorporate AI into their resilience strategies. AI-powered platforms can surface emerging threats by analyzing risk indicators in real time, identify gaps in control coverage, and accelerate scenario testing by simulating disruptions across systems and third parties.

GRC leaders must build operational resilience into systems, teams, and third-party relationships — not just document plans. That means running live scenario tests, mapping critical vendor and service dependencies, and fixing weaknesses before they’re exposed by a real incident or flagged by regulators. AI supports this by automatically mapping dependencies and monitoring for changes that could signal future risk exposure.

Traditional GRC programs have typically taken a top-down approach, emphasizing the enforcement of strategy and compliance across the organization. But there’s a growing recognition that these models must evolve to support resilience-focused initiatives that are adaptive, data-driven, and integrated across teams. Using AI to consolidate and analyze data across functions enables faster, better-informed decisions and stronger cross-enterprise resilience.

Regulators in several major markets now require organizations to prove they can continue operating through disruptions, not just document response plans:

- DORA (Digital Operational Resilience Act) – EU: Applies from January 2025 and requires financial entities to manage ICT risk and test their digital operational resilience. Companies must conduct regular resilience testing, manage ICT third-party risk, and report major ICT-related incidents to regulators. Non-compliance carries financial and operational risks, including regulatory penalties.

- FCA & Bank of England – UK: Requires firms to identify their important business services and set impact tolerances for the maximum disruption each service can withstand before causing intolerable harm to customers or risks to financial stability. Firms must map the dependencies behind those services, test against severe but plausible scenarios, and demonstrate to regulators that they can remain within tolerance.

- APRA CPS 230 – Australia: In effect from July 2025, requires APRA-regulated entities, including banks and insurers, to manage operational risk, business continuity, and service provider risk at the enterprise level. Entities must show they can keep critical operations within defined tolerance levels through disruptions without exposing customers to financial or security risks.

Disruptions are happening more often and hitting harder. From trade restrictions to political instability and rising conflict, these changes are putting more pressure on GRC teams to respond quickly and adjust plans with less warning. AI supports this agility by automating early warning signals and helping teams prioritize action based on real-time risk exposure.

Learn how Resolver helps you stay up-to-date with global operational resilience regulations

Strategy 2: Use AI to transform risk and compliance in real time

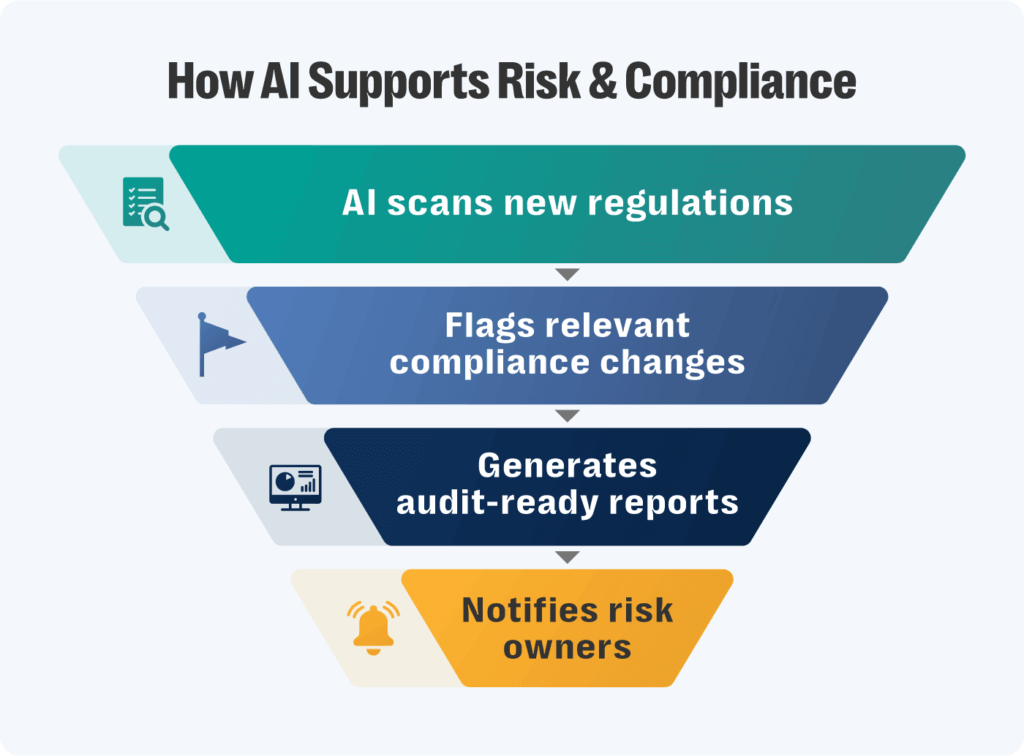

AI is fundamentally changing how GRC practitioners perform day-to-day risk and compliance activities. Teams that rely on manual processes spend countless hours tracking regulatory changes, monitoring transactions, and producing reports after the fact. AI enables a shift from reactive compliance to continuous, real-time oversight by:

- Automating regulatory tracking: AI continuously scans new laws, regulations, and supervisory guidance, highlighting only the changes that impact the organization and reducing manual review cycles.

- Enhancing fraud detection: Machine learning models can identify unusual patterns and emerging risks earlier than traditional rules-based approaches, helping prevent losses before they occur.

- Streamlining risk reporting: AI-generated reports consolidate data across systems and produce audit-ready documentation in minutes, improving accuracy while freeing teams to focus on judgment and decision-making.

As AI becomes embedded across business functions, regulators are increasingly setting expectations for how it’s governed within organizations. GRC teams play a critical role in establishing guardrails that allow innovation while maintaining control. This includes defining AI governance frameworks, ensuring regulatory and ethical compliance, monitoring model performance and drift, and maintaining clear accountability for AI-driven decisions.

Organizations that focus only on using AI to improve GRC efficiency, but fail to govern AI use enterprise-wide, will be exposed to regulatory, operational, and reputational risk. Those that address both dimensions can transform risk and compliance into a real-time capability: faster insight, stronger controls, and demonstrable trust as regulatory scrutiny continues to rise.

Strategy 3: Map your AI use to global regulations

AI in risk management is under growing scrutiny from regulators. GRC teams must now treat AI governance like financial compliance: track, audit, and justify decisions made by AI systems. The most effective way to prepare? Implementing a structured AI governance framework that ensures compliance, accountability, and risk mitigation.

Key AI regulations to be prepared for include:

- EU’s AI Act: Takes a risk-based approach, placing the strictest obligations on high-risk AI systems, including risk management, technical documentation, transparency, and human oversight.

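- NIST AI Risk Management Framework: A voluntary U.S. framework that emphasizes managing risks such as bias and security throughout the AI lifecycle, including ongoing measurement and monitoring of AI system performance.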

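- Canada’s AIDA: The proposed Artificial Intelligence and Data Act, introduced as part of Bill C-27, set out a risk-based approach to AI governance but has not been enacted. It still signals what to expect: companies should document AI decision-making and assess compliance gaps before any federal AI law takes effect.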

The EU AI Act, for example, reaches beyond EU borders. You don’t need a physical presence in the EU to fall within its scope: if you place AI systems on the EU market, or your AI systems produce output that is used in the EU, you’re still on the hook. Organizations that wait until enforcement begins will be at a disadvantage. Risk leaders should begin mapping their AI use cases to regulatory requirements now to avoid compliance gaps.

Also read: OSFI’s Guideline E-21 Ensuring Operational Resilience, Risk Management and Compliance

Strategy 4: Operationalize AI governance to future-proof your GRC strategy

AI governance is becoming a baseline expectation, not a nice-to-have. That means building a structured, enterprise-wide approach to managing AI risk, compliance, and accountability.

Adopting AI governance is a journey, not a quick fix. It requires careful planning, a clear strategy, risk management, governance structures, and integration throughout the organization.

Regulators are moving from voluntary AI guidelines toward enforceable requirements. To prepare, organizations should:

- Establish AI risk committees that oversee compliance, bias monitoring, and risk exposure.

- Implement enterprise-wide AI audits to ensure all AI-driven decisions are traceable and justifiable.

- Map AI use cases to regulatory frameworks to close compliance gaps before enforcement begins.

Building an effective AI strategy requires more than drafting a policy. It demands alignment with business goals, risk objectives, and clear governance structures.

Organizations that develop structured AI programs today will avoid last-minute compliance challenges and be positioned to lead.

Discover Resolver's solutions.

Turning GRC challenges into competitive advantages

AI-driven risk intelligence is redefining compliance, resilience, and governance. Organizations that move now will lead rather than lag as enforcement ramps up and disruption accelerates. Resolver’s Integrated GRC solution automates risk assessments and compliance tracking, helping organizations stay ahead of regulatory changes and emerging threats. Its assurance-grade AI combines expert review with human oversight of outputs, keeping insights accurate, explainable, and safe for regulated decisions.

From obligations to controls, our platform maintains traceability as rules evolve. Automated workflows and reporting reduce follow-ups and speed execution. That allows risk leaders to see coverage, gaps, and progress instantly across frameworks.

See how Resolver simplifies AI governance, risk automation, and compliance tracking with our Integrated GRC software. Book a demo today.