Meta’s move to replace its third-party fact-checking program with Community Notes marks an inflection point that significantly heightens compliance and reputational risks for brands. As with similar actions previously taken by X (formerly Twitter), the removal of a dedicated fact-checking service is highly likely to result in a sharp spike in user-generated content amplifying mis- and disinformation, conspiracy theories, spam, abuse and harassment across brand-owned and managed communities on social platforms.

In an age of community moderation and heightened compliance, security and reputational risks online, Resolver’s human-led social listening and online risk intelligence services can help your brand navigate this rapidly shifting risk landscape and safeguard its online channels and reputation.

Shifting content moderation rules heighten brand vulnerability

Meta has implemented changes to its content moderation policy in an attempt to “depoliticize” platform moderation and to curb the perceived infringement on free speech that is seen to have proliferated under traditional forms of moderation.

Some of the most consequential changes to Meta’s content moderation strategy include:

- Replacing fact-checkers with “Community Notes.”

- Reducing restrictions on discussions of sensitive topics like immigration and gender.

- Reintroducing political content into user feeds.

While the “Community Notes” feature is intended to empower users to tag misinformation collaboratively, the change in content moderation strategy has the potential to create notable compliance, reputational and security risks across brand-owned and managed online communities. Three pressing risks arising from this change in approach to content moderation are:

Increased mis- and disinformation: Because Community Notes relies on users to classify content collaboratively, it increases the likelihood of errors or bias in categorizing mis- and disinformation on the platform, leaving content at risk of being improperly moderated. For example, one mainstream platform saw a 74% increase in errors in accurately flagging posts sharing mis- and disinformation since the introduction of the feature. Addressing this mounting backlog of inaccurately classified posts has also delayed the flagging or removal of violative content. As a consequence, false and inflammatory rumors targeting brands or corporate leadership can persist online for longer, increasing the risk of such content spreading widely before correction.

Community bias: User-driven tools are vulnerable to community group biases, which can leave nuanced or minority viewpoints unaddressed. The decision of major platforms to change their restrictions on how people are allowed to discuss contentious social issues, such as gender or sexuality, has the potential to result in increased instances of spam, abuse and harassment targeting brands and senior leadership, both online and offline.

Brand vulnerability: The easing of moderation restrictions around sensitive issues such as gender, religion and politics can also increase the likelihood of brands being associated with harmful, divisive or politically charged content. In addition to bad actors leveraging brand visibility to increase the reach of inflammatory or controversial content, brand-owned and managed communities are likely to see an uptick in the amplification of false accusations of non-compliance or poor ESG performance. Together, these threats can lead to heightened regulatory scrutiny while also significantly increasing the risk of long-lasting reputational damage.

Brands caught up in content moderation cross-fire

During major cultural and real-world events, people look to social media for information, guidance, and reassurance. Yet, without clear moderation of content related to real-world crises, this commentary can ultimately hinder more than help. Prominent examples of the complications arising from a reliance on user-generated content moderation include:

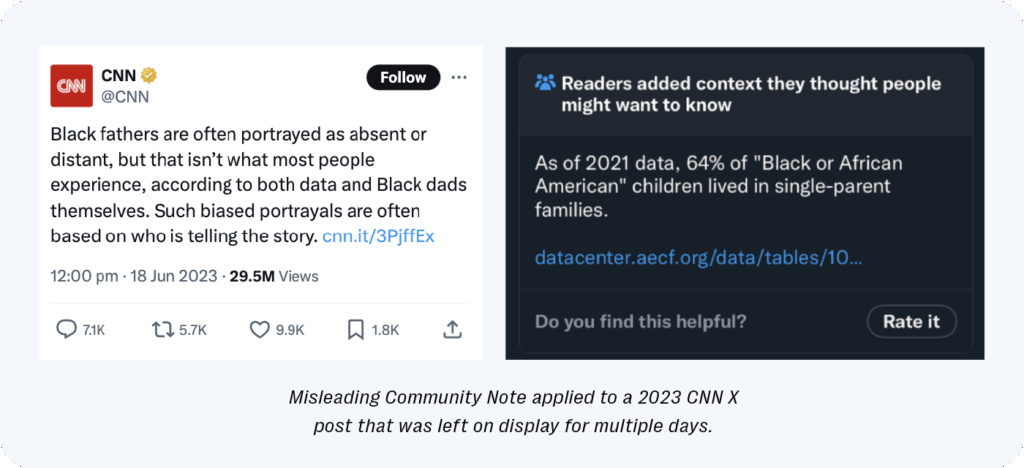

Misleading Information on CNN post

A CNN post from June 2023 that discussed the biased portrayal of Black fathers had a Community Note attached that included misleading data, further perpetuating the racist and biased stereotype that the original CNN post was aiming to debunk. The Community Note remained visible for multiple days.

Such examples from “Community Notes” show the dangers of crowdsourcing content moderation. Biased or politically motivated users can push their opinions onto posts from accounts with a large following, where users are looking for accurate and truthful information. As a result, all brands and public-facing individuals are at risk of being flagged as sharing misinformation or politically leaning statements that platform users may not agree with, even when the information shared is factually true.

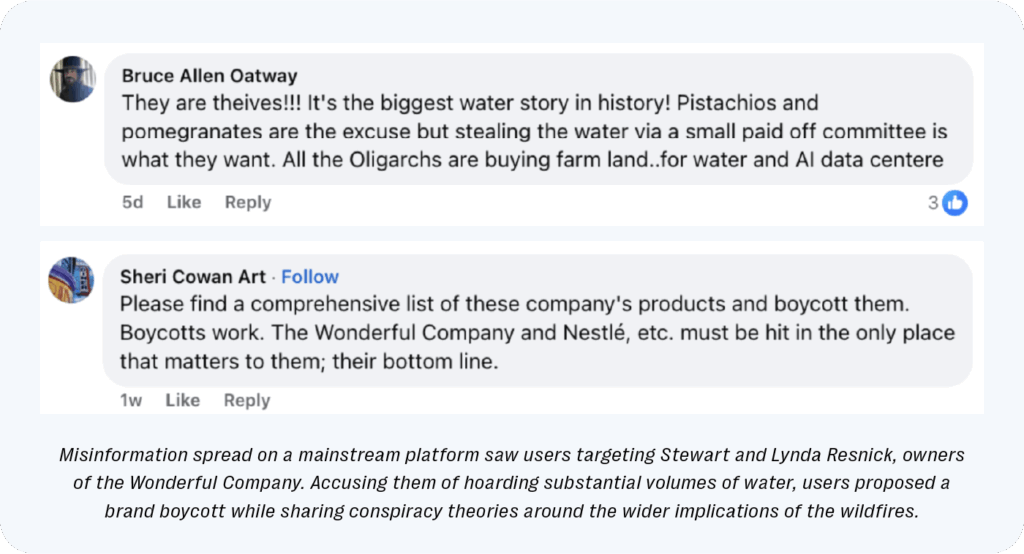

Misinformation During Los Angeles Wildfires

During the 2025 Los Angeles wildfires, numerous false claims surfaced on social media. Unfounded allegations around fire hydrant failures were shared across several mainstream platforms, alongside misinformation regarding a billionaire couple allegedly withholding crucial water reserves. This spread of misinformation complicated public understanding around the event, confusing responders and those trying to combat the situation.

Brands unrelated to the wildfires faced unwarranted criticism and boycott campaigns due to the spread of misinformation. The absence of moderation on social media platforms allowed false narratives to circulate rapidly, leading to unjustified public backlash. Corporate leadership remained unaware of the emerging risks to their brands until it was too late to manage or prevent the negative commentary.

Why social listening and online risk intelligence matters

The growing number of posts being mis-labelled or mis-contextualised through the Community Notes feature highlights the significant challenges businesses face due to misinformation across numerous social media platforms. While not always shared maliciously, incorrect information relating to an event, brand or business can spread quickly, emphasizing the need for robust content moderation and proactive social listening and crisis management strategies.

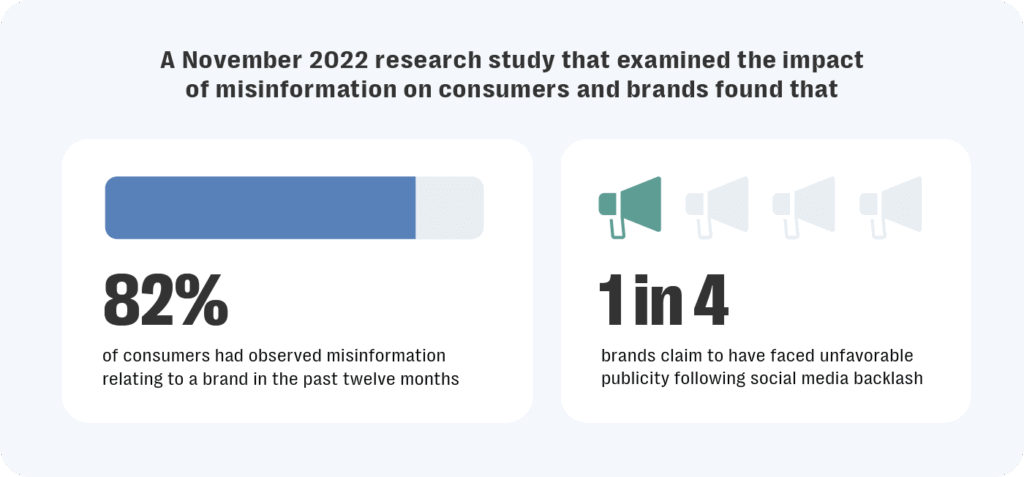

Inadequate moderation has real-world consequences. A November 2022 research study that examined the impact of misinformation on consumers and brands found that 82% of consumers had observed misinformation relating to a brand in the past twelve months, while 25% of brands claim to have faced unfavorable publicity following social media backlash. Effective moderation helps ensure that harmful, misleading, or offensive content shared on your owned social media pages does not tarnish your brand’s image.

The customer experience plays a vital role in this process. Misleading or inaccurate claims related to your brand or polarizing discourse on brand posts can lead to loss of trust, reduced customer loyalty, and significant financial setbacks. With platforms like Facebook holding 21% of the US digital ad market, brands cannot afford to neglect moderation or risk reputational harm in an era where digital interactions define perception.

Mitigating Risks in Real Time

Meta has admitted that the changes to its moderation approach will likely result in “catch[ing] less bad stuff.” Harmful online content such as misinformation, harassment, or extremism spreads quickly and can escalate rapidly without real-time intervention. Platforms with reduced moderation have seen hate speech increase by up to 50%, underscoring the need for immediate action to protect both brands and users.

Consistency and Trust

Audiences expect safe and engaging digital spaces. Professional moderation ensures that all interactions, content, and messaging on brand-owned content remain consistent with the brand’s unique values and identity. With 73% of consumers claiming that they feel unfavorably toward brands that have been associated with misinformation, successful moderation is crucial to reinforce user trust and encourage brand loyalty.

Conclusion

Meta’s sudden changes to its moderation policies are expected to alter the digital landscape in both the short and long term. Brands now face unexpected challenges in protecting their reputation, both across their own content and against potentially harmful user engagement. Resolver’s human-led social listening and online risk intelligence services not only offer tailored solutions but also provide peace of mind that your brand will continue to thrive online.

Our scalable and fully managed service is designed to adapt to your unique organizational needs and includes 24/7 monitoring and instant notifications for high-priority risks, ensuring that your crisis management team is always informed and ready to act on any critical developments.