When a national or global incident occurs, people often turn to social media to share their perspectives and have their voices heard. Similarly, many turn to these platforms to find and connect with like-minded voices who share similar values, personal experiences, and cultural backgrounds. As easily as this can foster connection and supportive communities, it can also lead to echo chambers that amplify hate and harm on social media platforms.

Divisive and harmful conversations are often kick-started by a small subset of accounts in these networks. These accounts act not only as the instigators of discourse, but also as the amplifiers and ringleaders of the wider network. In cases of online hate, they play a key role in fueling and spreading malicious and harmful rhetoric.

Yet, what if we could use network intelligence to identify and isolate a handful of the most influential voices within a hate group or following a mass shooting? By targeting these accounts, we can dampen the spread of polarizing and toxic beliefs online without silencing the broader discourse.

Using network science to combat online harms

Resolver employs an in-house algorithm that identifies accounts that are central to harmful conversation and highlights the affected networks they influence on social media platforms. Platforms can then take targeted action on these accounts by removing comment sections on the content, hiding their posts, or even banning the user altogether.

This algorithm has been adapted from percolation theory for social media networks. In percolation theory, the percolation threshold is the critical point at which a connected network breaks apart into small, disconnected fragments. Resolver uses centrality algorithms from network science to find the most influential accounts.

After identifying the critical nodes within the network, our algorithm ranks accounts by their centrality score and risk, then removes them from the network one by one, measuring the amount of fragmentation at each stage. Analogous to the percolation threshold, it identifies the point at which removing only the key actors introducing risk into the network is enough to sufficiently fragment the harmful online chatter. The goal is to identify the smallest number of accounts whose removal fragments or disrupts the network most effectively.
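As a rough illustration of the rank, remove and measure loop described above, here is a minimal Python sketch. It is not Resolver's in-house algorithm. It rests on several assumptions of our own: accounts are ranked by degree (number of connections) alone, with no risk score; fragmentation is measured as the shrinkage of the largest connected group of accounts; and the function names, account names and toy network are all hypothetical.

```python
from collections import deque


def largest_component_size(adj, removed):
    """Size of the largest connected group, ignoring removed accounts."""
    seen = set(removed)
    best = 0
    for start in adj:
        if start in seen:
            continue
        seen.add(start)
        queue = deque([start])
        size = 0
        while queue:
            node = queue.popleft()
            size += 1
            for neighbour in adj[node]:
                if neighbour not in seen:
                    seen.add(neighbour)
                    queue.append(neighbour)
        best = max(best, size)
    return best


def targeted_removal(edges, target_fragmentation):
    """Remove the best-connected remaining account, one at a time, until
    the largest connected group has shrunk by target_fragmentation."""
    adj = {}
    for a, b in edges:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)

    baseline = largest_component_size(adj, set())
    removed, gone = [], set()
    fragmentation = 0.0
    while fragmentation < target_fragmentation and len(gone) < len(adj):
        remaining = [n for n in adj if n not in gone]
        # Assumption: degree among remaining accounts stands in for the
        # centrality-and-risk ranking; ties are broken alphabetically.
        pick = min(remaining, key=lambda n: (-len(adj[n] - gone), n))
        removed.append(pick)
        gone.add(pick)
        fragmentation = 1 - largest_component_size(adj, gone) / baseline
    return removed, fragmentation


# Hypothetical toy network: two hub accounts and seven commenters.
edges = [("news_a", c) for c in ("c1", "c2", "c3", "c4")]
edges += [("news_b", c) for c in ("c5", "c6", "c7")]
edges.append(("news_a", "news_b"))

removed, fragmentation = targeted_removal(edges, target_fragmentation=0.5)
print(removed, round(fragmentation, 2))  # ['news_a'] 0.56
```

In this toy network, actioning a single hub account shrinks the largest connected group from nine accounts to four. A production system would likely swap in a richer centrality measure and weight each account by risk.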

Resolver’s innovative application of network intelligence to assess the impact of malicious networks on social platforms was recognized in the International State of Safety Tech 2024 Report, co-produced by Perspective Economics and Paladin Capital Group. The report drew attention to our use of percolation theory and a blast-radius-type approach as new and robust metrics for measuring return on investment within the online safety landscape.

Case Study 1: Disrupting anti-semitic networks

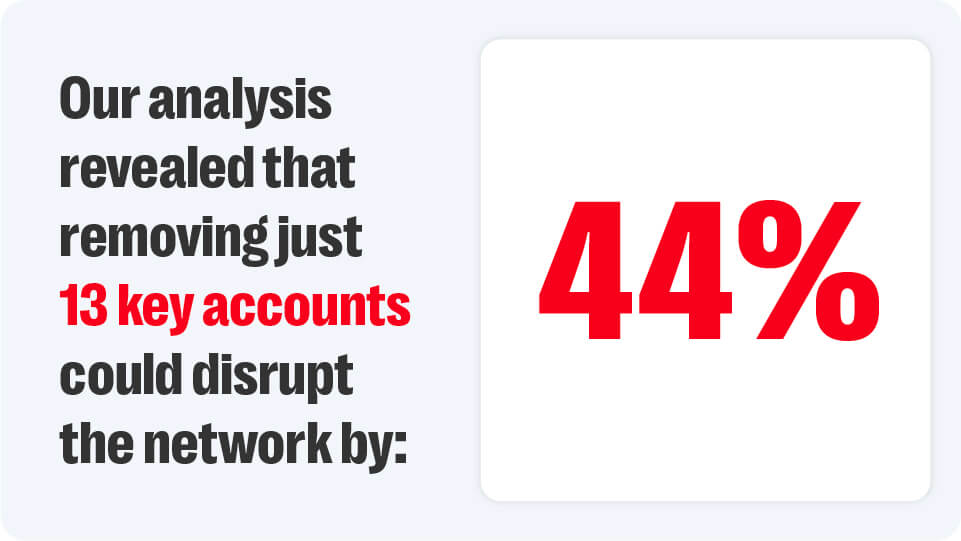

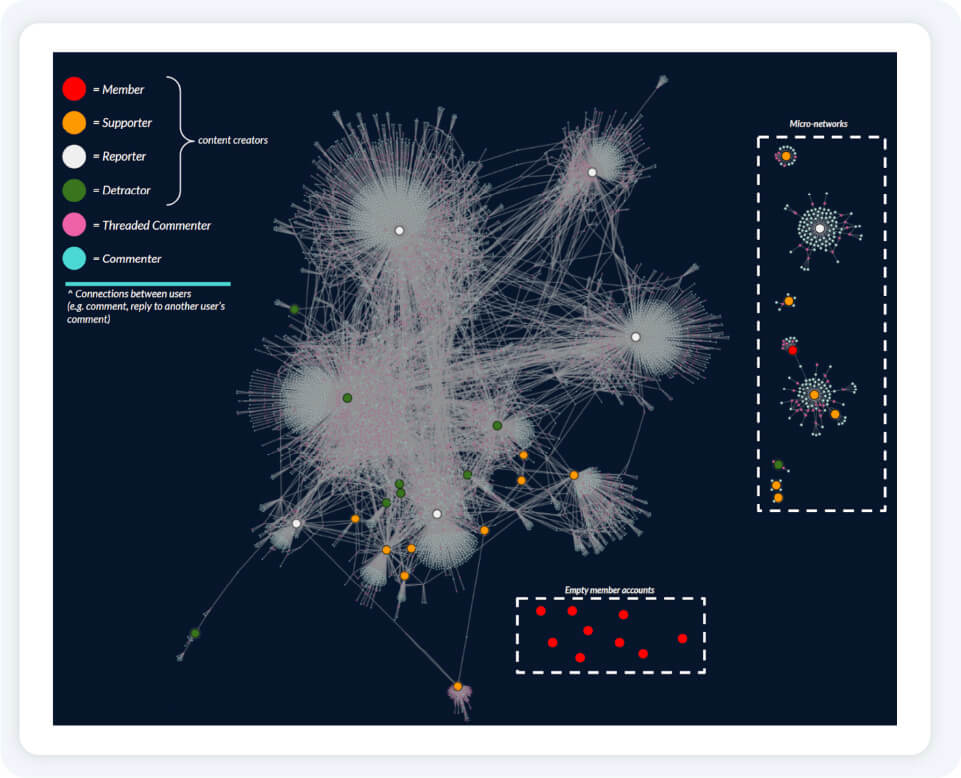

An anti-semitic hate group was active on a mainstream social media platform, and the platform sought Resolver’s help to better understand and manage the group’s influence and scope. Our analysis revealed that removing just 13 key accounts could disrupt the network by 44%. The majority of these accounts were verified news channels whose videos sparked hateful discussions and contributed to their spread in the comment sections. One course of action could be turning off comment sections for these videos to limit further amplification of the hate group’s rhetoric.

We also examined key accounts that weren’t verified reporters. We identified 56 such accounts, which made up about a quarter of the network. While the vast majority of these were commenters, several key nodes represented a handful of group members, supporters and detractors who posted media that drove the bulk of the harmful commentary. Some of this content promoted narratives related to the “White Replacement Theory” conspiracy and information about an anti-semitic flyer campaign.

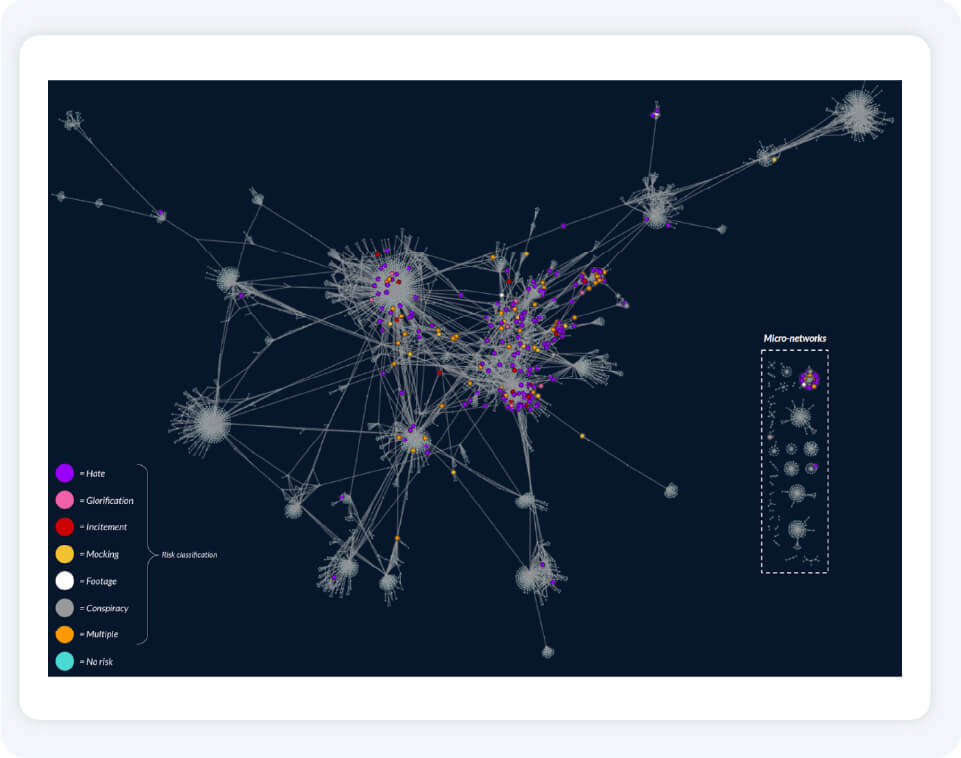

The network graph shows the distribution of the anti-semitic hate group on an online platform. Image produced by Resolver’s internal platform.

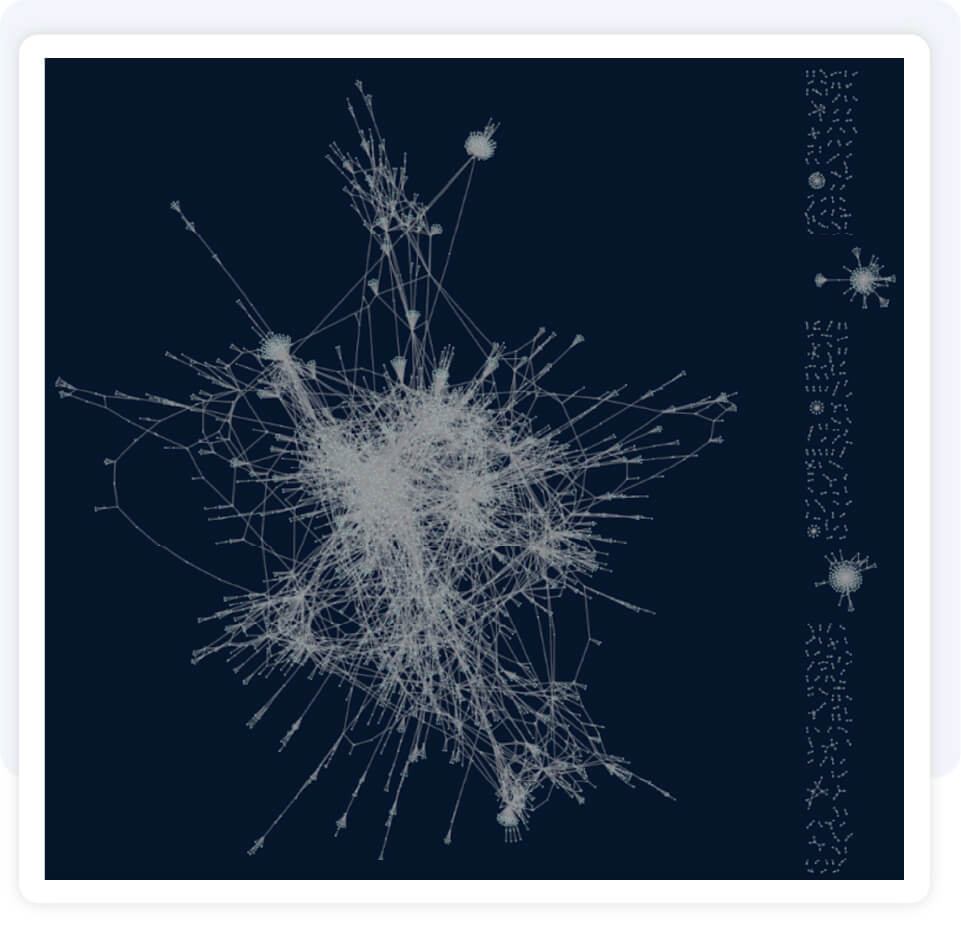

The image shows the fragmented network in the absence of the key voices. The network has been fragmented by over 40%. Images produced by Resolver’s internal platform.

Case Study 2: Managing hate speech after a mass shooting attack

A tragic shooting at a religious meeting in Europe resulted in nearly 10 fatalities, with the same number of people injured. Early engagement on a mainstream platform primarily revolved around content shared by news outlets. There were also isolated networks formed by members and supporters of the religious group involved. Hate speech thrived in the comment sections of these posts.

Commenters propagated many hateful narratives. One claimed the victims were gangstalkers, and therefore ‘deserved’ to die. Others spread anti-immigrant rhetoric, with one individual even suggesting that a “new Hitler” would be the solution. Some wrote that it was a false flag operation and a conspiracy by the government to increase gun regulations. A subset of these individuals cited “purim” to support their conspiracy theories.

The network of the incident on a mainstream platform 6 hours after the shooting occurred. The colors show the risk level of comments. The image was produced by Resolver’s internal platform.

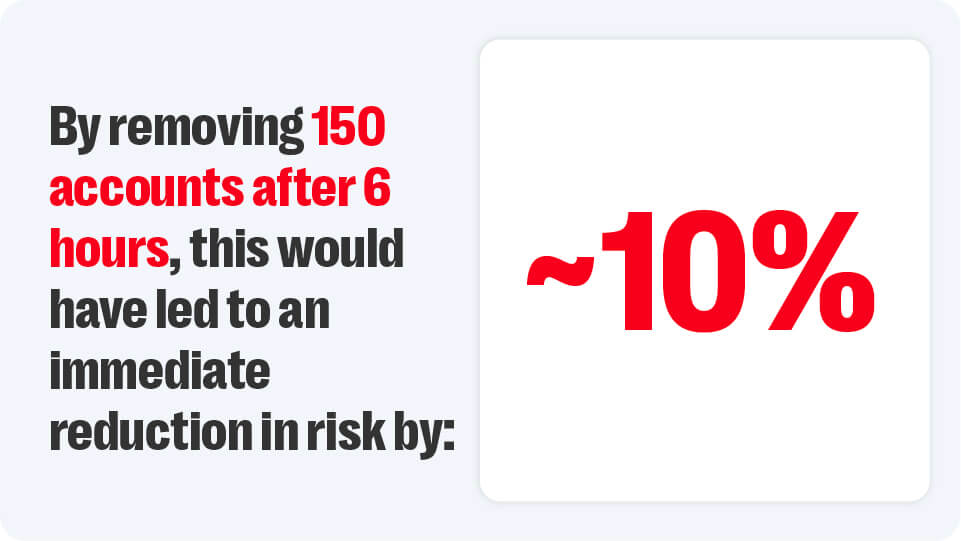

Our appraisal of the network found that removing 150 accounts 6 hours after the incident would have led to an immediate reduction in risk of ~10%. These were non-verified accounts, rather than reputable accounts such as those belonging to verified news outlets.

Twelve hours after the incident, the activity of the harmful network had increased by 100%, leading to a further 50% increase in risk. Notably, all of the accounts identified within the 6-hour time frame were still relevant, while the number of accounts required to decrease the efficacy of the network by 10% had increased to 351.

In other words, more than twice as many accounts (351 versus 150) would need to be actioned to achieve the same effect. This case study serves as a potent reminder of the advantages of early detection and mitigation by the platform as a means of effectively tackling hate speech.

Break harmful networks and build online safety

Using network intelligence to take targeted actions gives control back to social media platforms. It arms platforms with the intelligence to quickly action only the key players driving risk around a particular incident or group, in a way that aligns with their platform’s culture and policies.

Harmful networks are typically operated by, and revolve around, the rhetoric and ideology of one or more key players. These individuals radicalise and motivate more vulnerable users to adopt hateful views and engage in harmful activities. Membership in this network relies on both aligning with the group’s ideology and actively participating in such actions. As a consequence, such accounts can be conceived of as both originators and lynchpins within the harmful network.

Taking targeted actions against such accounts allows a platform to reduce the presence of harmful content and disrupt the radicalising influence such individuals have in the echo chamber, mitigating the likelihood of further, more egregious activity by the malicious network.

From swift detection to targeted actions

By combining advanced AI detection, network analysis and behavioural intelligence drawn from a cross-disciplinary team of expert human analysts, Resolver helps platforms identify and disrupt harmful networks as an incident unfolds or a group evolves.

Our robust and fully managed Trust and Safety Intelligence solutions augment the existing moderation capabilities of our partners and provide them with the sophistication and human expertise required to maintain platform integrity while remaining compliant with the latest online safety regulations, such as the Digital Services Act and the Online Safety Act.