Social media has woven itself into the fabric of our daily lives, offering connection, community, and creative outlets. Yet, beneath this positive surface lies a darker truth. At Resolver, we are dedicated to fostering safer online environments and empowering individuals, especially minors, to navigate the complexities of the online world.

With a team of experts in detecting suicide and self-harm (SSH) content and child safety issues, we provide the insights and intelligence needed to understand and mitigate risks, particularly those related to self-harm and suicidal ideation on social media platforms. This blog post draws on our ongoing research and commitment to raising awareness about these critical issues, demonstrating how specialized intelligence offers a crucial advantage in this evolving landscape.

The amplification of content promoting self-harm and suicide across social media has increased in recent years. Meanwhile, several significant incidents involving the deaths of teenagers in the US and UK, as well as new research and regulatory scrutiny, have brought SSH risks to the forefront of public debate. It’s imperative to understand the evolving landscape of online risks, from the rise of “digital self-harm” to the role of algorithms and the persistent threat of cyberbullying.

Social media usage and suicide and self-harm risks

In June 2025, the BBC reported that the UK government was considering implementing time restrictions on children’s social media use, including a potential two-hour daily cap and a 10 p.m. curfew. This move, driven by mounting evidence linking excessive social media use to mental health issues in young people, underscores the urgent public debate around this problem.

Similarly, a recent report from researchers at Weill Cornell Medicine, Columbia University and the University of California, which examined the impact of social media usage on suicidal ideation among children, reinforced a concerning reality: minors who become addicted to social media are at greater risk of suicidal thoughts and suicide attempts. These findings add to a growing consensus in academic studies highlighting the link between consumption of content promoting SSH on social media and real-world attempts to end one’s life. Understanding these connections requires deep analytical capabilities to pinpoint emerging threats and patterns effectively.

Below we outline some of the most prevalent forms of SSH risks encountered across the social media landscape.

“Digital Self-Harm” is on the rise

“Digital self-harm”, where individuals anonymously post or share hurtful content about themselves online, is an increasingly common form of SSH online. While this form of self-harm can be misconstrued as bullying by another individual, in the case of digital self-harm the perpetrator and victim are, in fact, the same person.

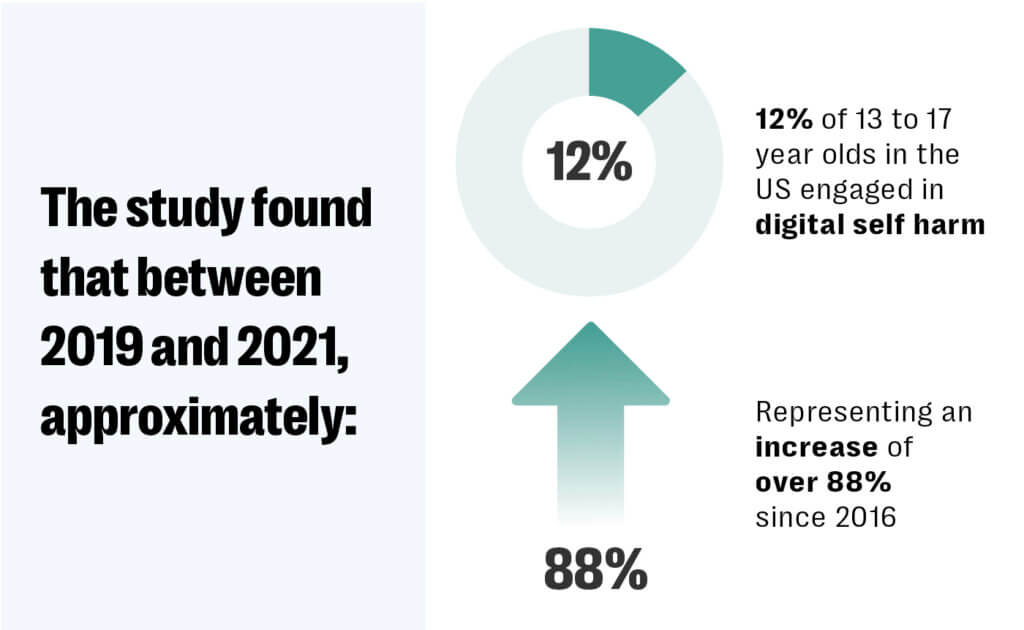

A 2024 study published in the Journal of Violence revealed a concerning surge in digital self-harm practices among US teens. The study found that between 2019 and 2021, approximately 9% to 12% of 13- to 17-year-olds in the US engaged in digital self-harm, representing an increase of over 88% since 2016. Motivations for this behaviour range from self-hate and attention-seeking to a desperate cry for help. The critical concern here is the strong association between digital self-harm and traditional self-harm, as well as suicidality.

Broader research into the extent of digital self-harm practices among adolescent users is complicated by the anonymous and self-inflicted nature of the act. As a result, few individuals, particularly young people, are likely to admit having engaged in such practices.

The devastating impact of cyberbullying

Cyberbullying remains a major contributor to self-harm and suicidal ideation, particularly as such online harassment, often anonymous and relentless, can severely impact an individual’s self-esteem and mental wellbeing.

A January 2024 review of the available research into the effects of cyberbullying by the National Library of Medicine found that students are some of the worst affected victims, while other studies uncovered a strong correlation between being cyberbullied and increased rates of depression, low self-esteem, anti-social behavior and suicidal thoughts or attempts. The tragic death of Mikayla Raines, a US-based animal rights campaigner, following a sustained cyberbullying campaign, underscores the real-world consequences of such online abuse and harassment.

Sustained cyberbullying, whether from one individual or a network, can quickly feel all-encompassing and claustrophobic, leaving victims feeling helpless and convinced that the “whole world is against them.”

Algorithms and online echo chambers

Designed primarily to maximize engagement, social media algorithms are powerful systems that are incredibly effective at keeping us connected. However, these same recommendation systems also contribute to complex and unintended challenges, making the analysis of algorithmic impact more important than ever. Algorithms learn our preferences, then deliver more of what we’ve previously engaged with. While this can be fantastic for discovering new interests, it can also inadvertently reinforce existing viewpoints or interests, leading to the formation of digital “echo chambers” where diverse perspectives are less visible.

For individuals navigating mental health challenges, such algorithmic recommendation mechanisms can amplify exposure to content related to self-harm, depression, or even pro-suicide messaging – often without the user ever explicitly searching for it. Sophisticated intelligence monitoring can illuminate these algorithmic pathways, identifying how harmful content propagates and allowing for proactive measures that disrupt its reach and help safeguard users.

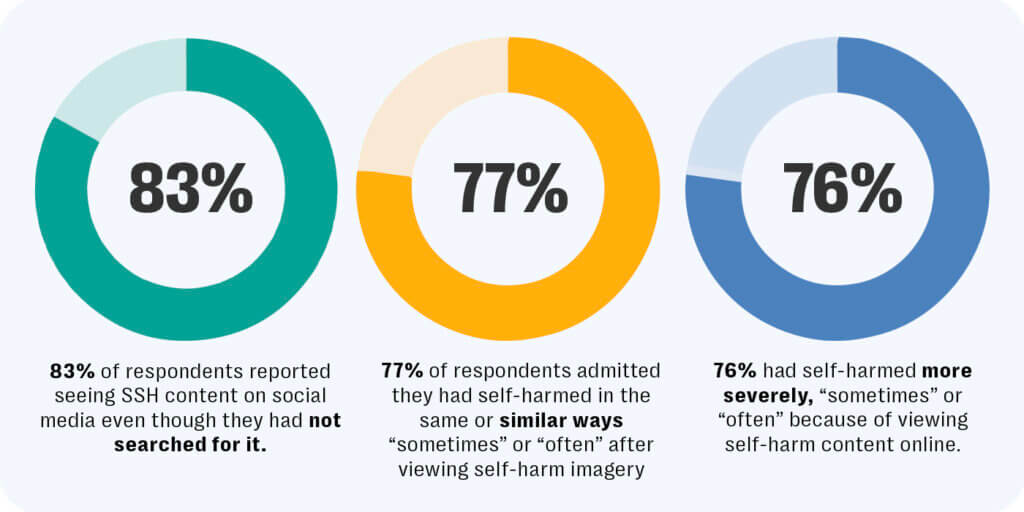

According to UK survey data on how social media users experience SSH risks, collected by Samaritans in collaboration with Swansea University, around 83% of respondents reported seeing SSH content on social media even though they had not searched for it. Additionally, 77% of respondents said they had self-harmed in the same or similar ways “sometimes” or “often” after viewing self-harm imagery, while 76% had self-harmed more severely “sometimes” or “often” because of viewing self-harm content online. This sustained, often algorithmically driven exposure, even when not actively sought by the user, carries the very real risk of normalizing harmful behaviors and intensifying existing distress. It also serves as a powerful reminder that digital currents can sometimes pull us in directions we never intended.

Pro-suicide, self-harm and eating disorder communities

For individuals grappling with suicidal ideation or depression, social media can present a complex duality. On one hand, it offers an accessible avenue for connection and community, allowing vulnerable individuals to find others who share similar struggles and feel less isolated. Access to an online community of like-minded individuals can help foster a sense of belonging and provide platforms for sharing experiences and seeking emotional support. However, this potential for positive connection can also be accompanied by significant harms.

An environment in which self-harm or suicide is normalized can lead individuals to spiral further into distress. Exposure to unmoderated content about self-harm methods or pro-suicide narratives becomes commonplace, ultimately exacerbating their vulnerability to real-world harms. Community-seeking behaviour among vulnerable individuals is on the rise, particularly among those grappling with eating disorders, following the resurgence of online “skinny influencer” trends.

For individuals grappling with eating disorders, the online world can become a source of intense connection. Often feeling isolated and misunderstood in their daily lives, they seek out communities where others share similar thoughts and struggles. These “pro-ana” or “pro-mia” communities offer a powerful, albeit distorted, sense of belonging and validation, making them feel less alone in their challenging experience.

Here, behaviours that are clinically dangerous are unfortunately normalized, even celebrated, reinforcing the eating disorder and making the path to recovery seem like a betrayal of this newfound understanding within the community. Beyond validation, these online environments actively fuel eating disorder tendencies. Members often exchange harmful tips and tricks for maintaining or worsening their condition, engaging in dangerous competitive cycles around weight loss or restriction.

The anonymity of the internet plays a crucial role, providing a shield that allows individuals to discuss highly personal and stigmatized behaviors without immediate fear of judgment or intervention. This reduced inhibition enables them to reinforce destructive patterns and, tragically, further entrench their eating disorder away from real-world support.

Harmful social media “challenges” and viral trends

Social media has also given rise to a disturbing phenomenon: dangerous challenges that can lead to severe injury or even death. These viral trends include perilous acts like the “Blackout Challenge” and “Chroming”, in which participants attempt to choke themselves until unconscious or inhale aerosol fumes, respectively.

For young people, the allure is multifaceted: a potent mix of peer pressure, the intense desire for viral fame and social validation through likes and views, and a strong drive to belong to online communities. Coupled with the inherent risk-taking tendencies of adolescents and, often, a profound underestimation of the physical dangers involved, these challenges can spread like wildfire, drawing in vulnerable youth who might otherwise never contemplate such acts. The consequences are devastating, ranging from severe injuries and long-term health complications to fatalities.

We are now seeing the profound impact of this, not just in individual family tragedies, but in a growing wave of legal action. Parents of victims are increasingly filing lawsuits against the social media platforms that hosted these challenges. These lawsuits contend that platform algorithms actively promote and push dangerous challenges into the feeds of vulnerable young users, directly contributing to their participation and the resulting harm or death.

How can we tackle SSH risks on social media?

Addressing these issues effectively requires a truly holistic approach, particularly at the user level. While technological solutions are undeniably vital, vulnerable users greatly benefit from a more human-centered approach to ensuring their well-being online. Ultimately, ensuring accessible and age-appropriate support resources for those who are struggling remains an essential component of fostering truly safer online environments. Expert-led intelligence can help platforms and technology service providers identify and mitigate nuanced, subjective and insidious SSH-related harm on their platforms in a way that technology alone cannot.

The world of social media holds immense power for good, offering communities and support systems. However, as the trends around self-harm and suicide on social media continue to evolve, it’s a reminder that vigilance, collaboration, and a commitment to user safety must be at the forefront of the online experience. Speak to Resolver Trust and Safety today to find out how we can help your platform or technology service keep its users safe and prevent your online spaces from becoming a haven for SSH content.

If you or someone you know is struggling with thoughts of self-harm or suicide, please reach out for help. You are not alone.

- Samaritans: Call 116 123 (UK & ROI) – available 24/7, free, and confidential.

- PAPYRUS HOPELINE247: Call 0800 068 4141, text 07860039967, or email pat@papyrus-uk.org – for young people under 35 experiencing suicidal thoughts.

- Mind: Visit mind.org.uk for information and support.