In May 2023, a fake image of the Pentagon on fire briefly spread across social media. Within four minutes, the Dow dropped 85 points. The image was AI-generated. The market didn’t wait for a fact-check.

That moment exposed a broader problem: AI-generated misinformation moves faster than the truth and puts financial markets, brand reputations and public trust at risk. False claims now flow from fringe forums into algorithm-driven feeds. They surface on mainstream platforms before brand, communications or risk teams even know they exist, sometimes before your first morning coffee.

Digital threat analysts are tracking a sharp rise in misinformation and disinformation campaigns aimed at regulated industries: finance, pharma, energy and infrastructure. These aren’t isolated incidents. They’re deliberate efforts to provoke controversy, distort public narratives or damage reputations. One day, it’s a misread ESG disclosure. The next, it’s a fake press release with your brand name attached. These attacks recur, and they are growing more sophisticated.

Most social listening platforms track volume and sentiment. But they aren’t built to assess nuance, source credibility or coordinated behavior. That’s where threats slip through. Staying ahead means combining real-time detection with expert human review. Context turns a minor alert into a meaningful risk signal — and, ideally, a fast response.

Read on to see why contextual online risk intelligence is becoming essential infrastructure for compliance, security and communications teams.

The alarming scale of AI-generated misinformation

Misinformation and disinformation have been part of public discourse for millennia. As far back as the first century B.C., Julius Caesar’s heir, Octavian, and his rival, Mark Antony, used coins, poetry and speeches to sway military and public opinion.

But in recent years, the rise of generative AI has fundamentally changed these dynamics. Synthetic text, audio, video and images have made misinformation and disinformation cheaper, faster and easier to produce. This AI-generated content can then be deployed to manipulate perception, discredit brands or manufacture backlash over a company’s governance, operations or compliance.

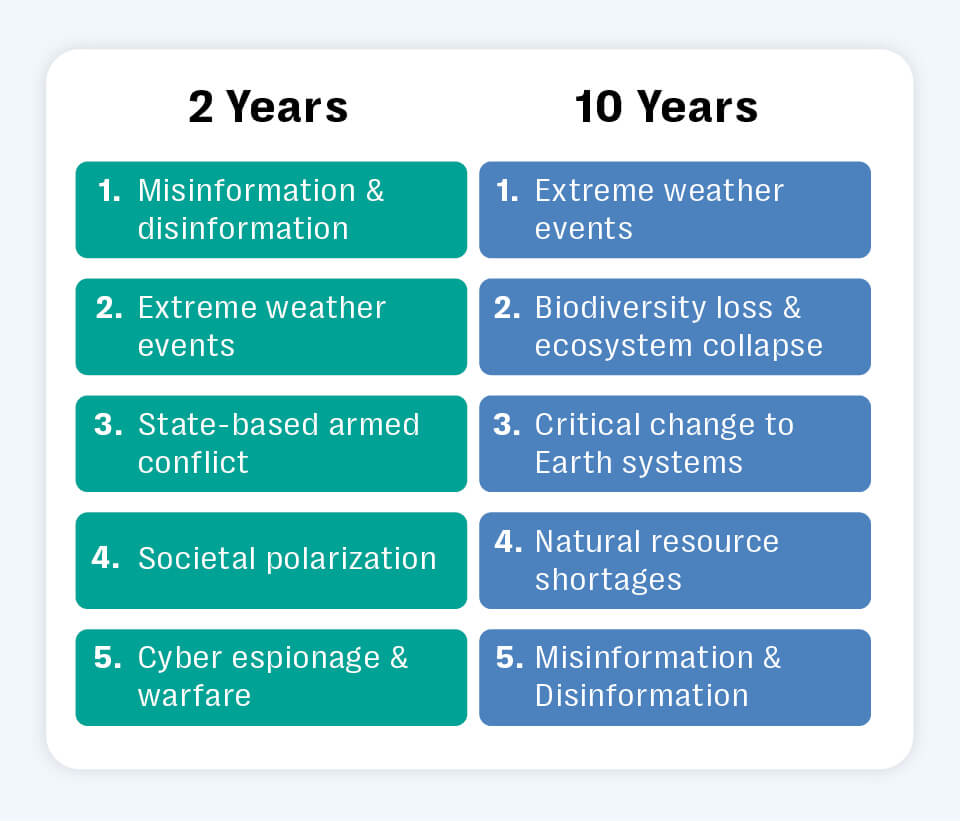

The scale of the threat is no longer speculative. For the second year in a row, the World Economic Forum’s Global Risks Report 2025 listed mis- and disinformation as a top-ranked short- and medium-term concern across all risk categories. The report also highlighted how mis- and disinformation can “interact with and be exacerbated by other technological factors, particularly the rise of AI-generated content.”

Mis- and disinformation featured in the top five of both the short-term and long-term risks listed in the World Economic Forum’s Global Risks Report 2025.

Real-world scenarios driven by AI-generated misinformation

Experts believe we have entered an era in which a single fake video or synthetic image can wipe millions off a brand’s market value in minutes. Other plausible real-world scenarios include:

- Executive impersonation attacks that depict leaders making false or inflammatory statements about company policies, ESG practices or financial disclosures.

- Fabricated press releases, seeded in AI-generated news feeds or fringe forums, that spread across mainstream platforms before verification.

- Resurfaced controversies, amplified by AI tools that strip away context and distort historical facts.

Harvard Law School’s May 2025 review of the business risks posed by AI-generated mis- and disinformation concluded that the spread of such synthetic content has already caused “significant risks, including financial fraud or reputational damage.” The review also warned that “regulation meant to prevent the spread of disinformation may also expose companies to compliance risk.”

The brand reputation risks from AI-generated misinformation

The sophistication and scale of AI-generated mis- and disinformation create an entirely new threat landscape for modern businesses. Bad actors can now use the tactics, techniques and procedures associated with state-level disinformation operations to spread false and incendiary allegations about major brands.

Reflecting the growing risk to businesses, the U.S. Cybersecurity and Infrastructure Security Agency (CISA) identified “creating deepfakes and synthetic media” as one of the eight tactics most commonly used to spread false and inflammatory narratives online.

In December 2024, The Guardian reported how false claims about the health risks of new feed additives, which a Danish-Swedish company used to reduce methane output from cows, rapidly escalated into a business-critical risk.

Recently, a prominent German pharmaceutical brand was forced to publish a fact-check on its website after facing persistent false allegations linking the company to Agent Orange production. These enterprise-wide risks extend far beyond traditional public relations concerns. They affect multiple departments and operational functions and carry adverse financial and regulatory consequences:

- Compliance and regulatory risk: ESG misstatements triggered by false narratives may prompt regulatory reviews or result in compliance violations.

- Security and investigations risk: Executive impersonation attacks using deepfake technology pose direct security threats. Fraudsters using synthetic voices and deepfake videos have convinced employees to transfer substantial sums to fake accounts.

- Financial risk: Regulatory fines stemming from ESG misinformation, reputational damage leading to consumer boycotts, stock price volatility from executive impersonation or false disclosures, and operational strain on customer service teams overwhelmed by misinformation-driven inquiries.

Human-in-the-loop: why smarter risk intelligence starts with context

Most media monitoring and social listening platforms were built for a pre-AI world. They rely on keyword matching, sentiment scoring, and volume-based alerts — tools that work well for tracking brand and media mentions, but often fall short when threats are subtle, coordinated or strategic. Used alone, these systems often miss:

- Nuance and intent

- Source credibility

- Signs of coordinated manipulation

High-volume chatter overwhelms teams with noise. Meanwhile, low-volume threats — the kind that spark real damage — go unnoticed. Most importantly, these limitations can make it challenging for busy teams to distinguish a credible threat from a routine complaint or trending topic.

To stay ahead, organizations need more than alerts. They need context: the ability to validate what matters, filter out what doesn’t, and prioritize the risks that demand a response.

Resolver’s Social Listening and Online Risk Intelligence Service combines advanced, multilingual detection across the surface, deep and dark web with expert human validation. Our analysts provide the contextual insight and prioritization that automation alone can’t deliver—surfacing credible risks early, even from fringe platforms and niche forums.

This human-in-the-loop AI approach transforms raw signal into real-time, executive-ready intelligence. When AI-generated misinformation evolves by the hour, speed, credibility and context — not alert volume — define your ability to respond.

As generative threats evolve, the gap between signal detection and risk response will define which brands stay ahead and which get caught off guard. The brands that stay ahead will be those that augment traditional social listening with comprehensive online risk intelligence, designed to filter out high-volume noise, prioritize credible threats and deliver forward-looking insights that help their executives act with confidence.