The recent dismantling of Kidflix, one of the largest known child sexual abuse platforms in the world, has once again exposed the scale and severity of child exploitation online.

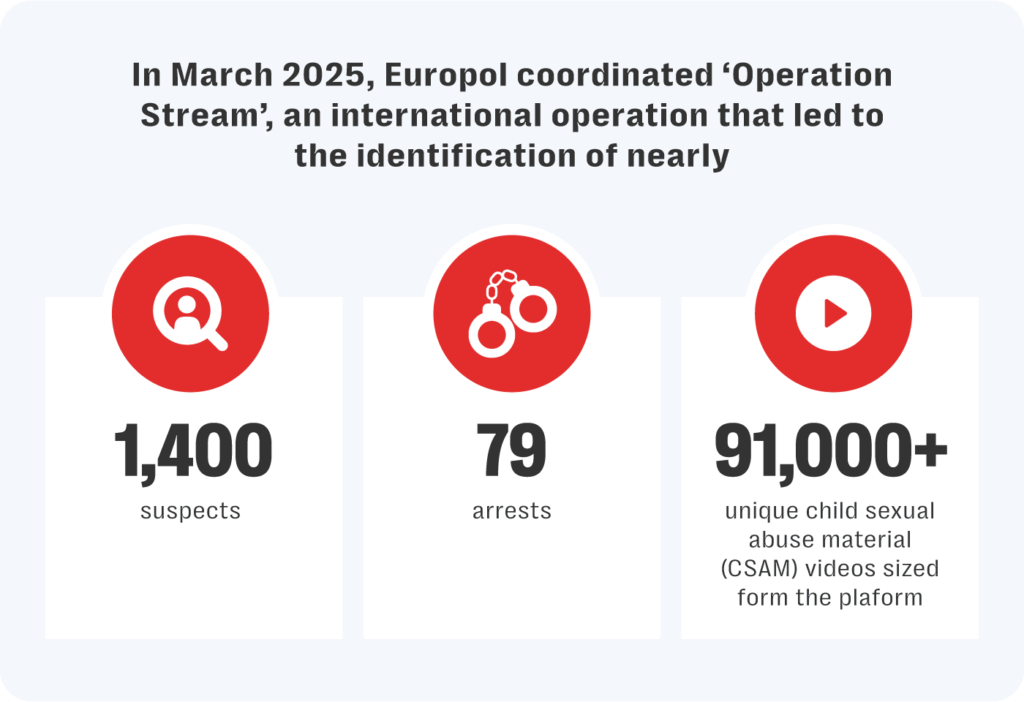

In March 2025, Europol coordinated ‘Operation Stream’, an international operation that led to the identification of nearly 1,400 suspects, 79 arrests, and the seizure of the platform, which hosted more than 91,000 unique child sexual abuse material (CSAM) videos.

This was a win for global law enforcement. But it was not a surprise.

Systemic Harms Require a Systemic Response

Kidflix used modern streaming technology to streamline the distribution of CSAM. The platform allowed users to stream and download videos, making it easier for offenders to consume and disseminate this harmful and illegal content.

Payments were made through a variety of channels, including cryptocurrencies, decentralized finance platforms, Bitcoin ATMs, and even centralized exchanges based in the US. The use of multiple platforms, combined with techniques to obfuscate the source of payments, added layers of anonymity and complexity to tracking and identifying users. This case exemplifies how everyday platforms can be exploited to perpetuate serious online harms.

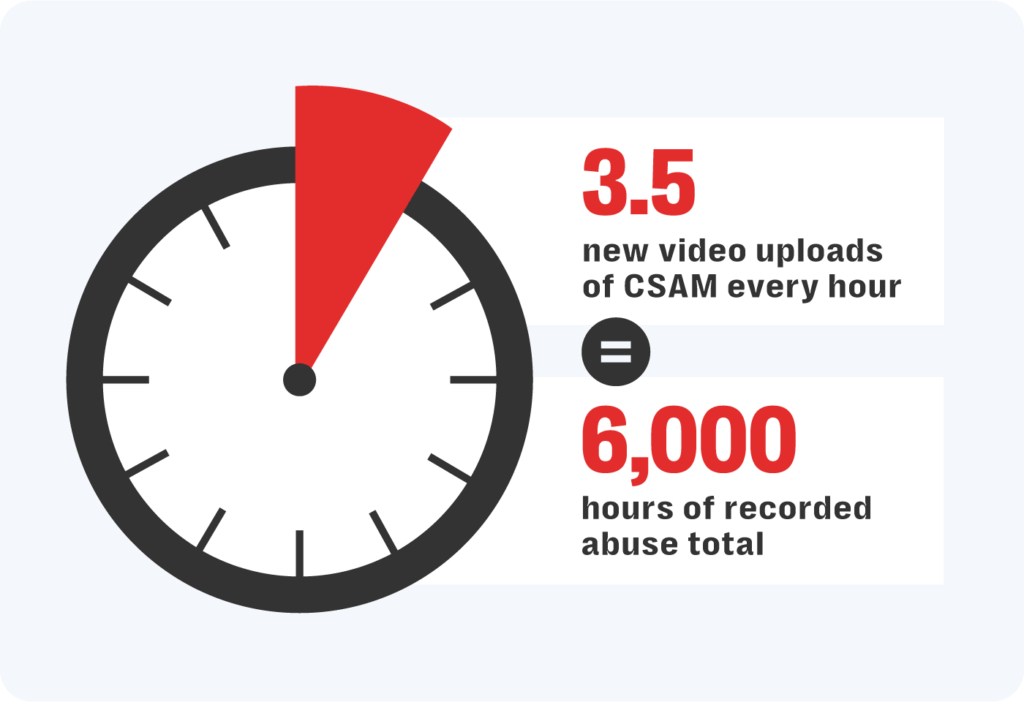

Kidflix is not an anomaly. It is the latest and largest example of how online infrastructure, whether purpose-built or repurposed, can be exploited and weaponized to facilitate severe, ongoing abuse and re-traumatization. Kidflix’s existence and operation highlight the darker undercurrent of the internet. The platform offered streaming, downloading, and payment via cryptocurrency. It reportedly averaged 3.5 new CSAM video uploads every hour, amounting in total to over 6,000 hours of recorded abuse. That scale isn’t just staggering – it’s systemic.

It also reflects the ease with which offenders can exploit platform infrastructure for criminal purposes – in this case, to access and share CSAM.

Technology Isn’t Neutral – and Neither Is Inaction

As technologies such as generative AI become increasingly accessible, we’re seeing them weaponized to create synthetic CSAM, automate grooming behaviours, and mimic victims. Meanwhile, detection tools, escalation pathways, and enforcement mechanisms lag behind, often because safety systems aren’t designed until after harm has already scaled.

Rule 65 of 4chan’s infamous “Rules of the Internet” states: “If there isn’t, there will be.” In the context of online harm, the rule holds: we are seeing a steady stream of new services, platforms, and websites created specifically to serve predators.

This isn’t just a warning – it’s a challenge. The industry’s responsibility is to respond before the next Kidflix appears.

Behind Every File Is a Life

These crimes are not abstract. Behind every image or video on Kidflix is a real child whose abuse is compounded each time that content is viewed or shared. While the takedown of Kidflix has led to arrests and child safeguarding interventions, these efforts address only a fraction of the broader landscape.

Every day, thousands of new pieces of CSAM, whether real or AI-generated, are shared online. Every day, new communities form for the purpose of exploiting children. And one day, in the not-so-distant future, another criminal will create a new “Kidflix.”

While the shutdown of Kidflix is a significant victory, the fight against online child exploitation is ongoing. It takes a village to raise a child, and it will take all of us to keep them safe. We must, at all levels, collaborate far more proactively to understand and prevent the myriad online harms facing online spaces and their users.

Online abuse is persistent, shapeshifting, and deeply networked, so our response must be too.

Resolver’s Role in Prevention

At Resolver Trust & Safety, we’ve spent 20 years working with online platforms, marketplaces and governments to address the complex ecosystem of online harms.

Our work focuses on helping organisations design for safety at scale, whether by building stronger internal policies, improving content and conduct moderation systems, supporting trust & safety teams, or coordinating critical response mechanisms across stakeholders.

The Kidflix case makes clear what many already know: this work can’t wait. Safety can’t be retrofitted. Stopping abuse isn’t just about taking down platforms; it’s about ensuring they’re never viable in the first place.

Let’s Build What Comes Next

We don’t just need better takedowns. We need better infrastructure, enforcement pathways that work, detection systems that evolve, and teams empowered to act early. We need collaboration that extends beyond conferences.

If your organization is navigating these challenges, Resolver is here to help. Let’s build systems that make online spaces safer – for everyone.