The risk posed by mis- and disinformation is more complex than it may appear. What seems like an innocent conspiracy theory or a minor departure from the truth can, through repeated exposure, become a trigger for more dangerous narratives to take root, driving radicalization toward manufactured scenarios.

The slow erosion of the online information environment leaves social media users exposed to increasingly problematic content. Over time, audiences may be conditioned to accept false claims over accurate ones. With more false information available than ever, the risk of harm driven by mis- and disinformation continues to grow.

This raises two critical questions: Is simply removing this content the best course of action? And how should platform trust and safety efforts be structured to limit harm without creating new vulnerabilities?

Regulatory complexity and moderation gaps

Recent changes in trust and safety approaches to content moderation, combined with the implementation of the Online Safety Act (OSA) in the UK and the Digital Services Act (DSA) in the EU, have left platforms navigating competing expectations around handling harmful false information. This leaves users exposed to mis- and disinformation while regulators and trust and safety teams reassess how best to limit the spread of misleading content.

This moment requires a clear, approachable methodology that provides an accessible view of verified facts — a foundation users and platforms can rely on when working to reduce harm caused by mis- and disinformation. Easily digestible factual information plays a critical role in helping trust and safety practitioners reduce risk.

Resolver’s Trust and Safety Intelligence work shows that individuals who intentionally spread harmful content adjust their tactics to evade content moderation. They may present falsehoods as opinion or satire, or shift to other platforms when enforcement tightens.

Addressing limitations with moderation and regulation

Reductions in platform-centric enforcement in favor of community-driven moderation can help platforms respond quickly to mis- and disinformation risks. But this approach carries concerns around bias, manipulation, scalability, privacy and volunteer burnout. Community-driven moderation can also be gamed. For example, Resolver analysts have observed users applying false community labels in attempts to remove lawful content.

Since coming into force in March 2025, the OSA has created a “false communications offense” for sending or posting information that is knowingly false with intent to cause non-trivial harm. Because the offense turns on knowledge and intent, large volumes of false information fall into a gray area where it is unclear how platforms should enforce these rules.

According to a UNESCO study in 2024, two-thirds of influencers surveyed said they do not fact-check information before sharing it, instead relying on engagement metrics. While many expressed willingness to receive training on identifying mis- and disinformation, this highlights how significant amounts of content can evade moderation or regulatory action. Both intentional and unintentional spreaders of false information can exploit these gaps.

Resolver’s analysis shows that social media users who intentionally spread harmful content are acutely aware of platform policies. They adjust their behavior to evade detection, ensuring they retain large audiences. As a result, significant volumes of false content — both deliberate and accidental — slip through unless they meet precise enforcement criteria.

One approach is increasing access to high-quality information and targeted education to fill gaps in trust and safety policy or regulation. An emphasis on education, which puts the onus on users to spot misinformation, is the model adopted in Finland, where media literacy is taught as a core curriculum subject from the age of six. There is evidence to suggest that this is a positive, and certainly proactive, approach: in 2023, Finland ranked highest in the European Media Literacy Index. While the approach is promising, there is limited research on whether it meaningfully reduces misinformation at scale.

How ease of access and understanding can influence the spread of mis- and disinformation

The COVID-19 pandemic showed that providing accurate information is not enough to stop disinformation. Even when governments and agencies communicated regularly, much of the information was complex, technical or difficult for the public to interpret. That created gaps where false narratives could spread.

This is especially true with political or medical topics, where accurate information is often presented in ways that are hard for non-experts to understand. Misunderstandings or confusion can then create ideal conditions for mis- and disinformation to take hold. As a result, many people turn to social media and influencers, who present information in a simpler and more accessible format. A recent Pew survey of news consumption habits in the US found that one in five Americans regularly get their news from influencers on social platforms.

Similarly, in 2024, just over half of UK internet users reported using social media as their primary news source. Other data shows that trust in influencers varies by topic. As many as 69% of people trust influencer product recommendations over those from brands, while only about 15% trust influencers in general.

Polling by Ipsos in 2024 reinforces this divide. Trust in influencers varies sharply by generation, with younger users more likely to believe influencer content. This data does not account for influencers who intentionally mislead their audiences through conspiracy theories, unverified claims or ideological disinformation. Even so, it suggests that as many as 8 million UK internet users may be susceptible to believing false information from influencers alone.

Historic cases of intentional disinformation spreaders show that deplatforming high-profile individuals can galvanize their audiences and drive them to alternative platforms with less oversight.

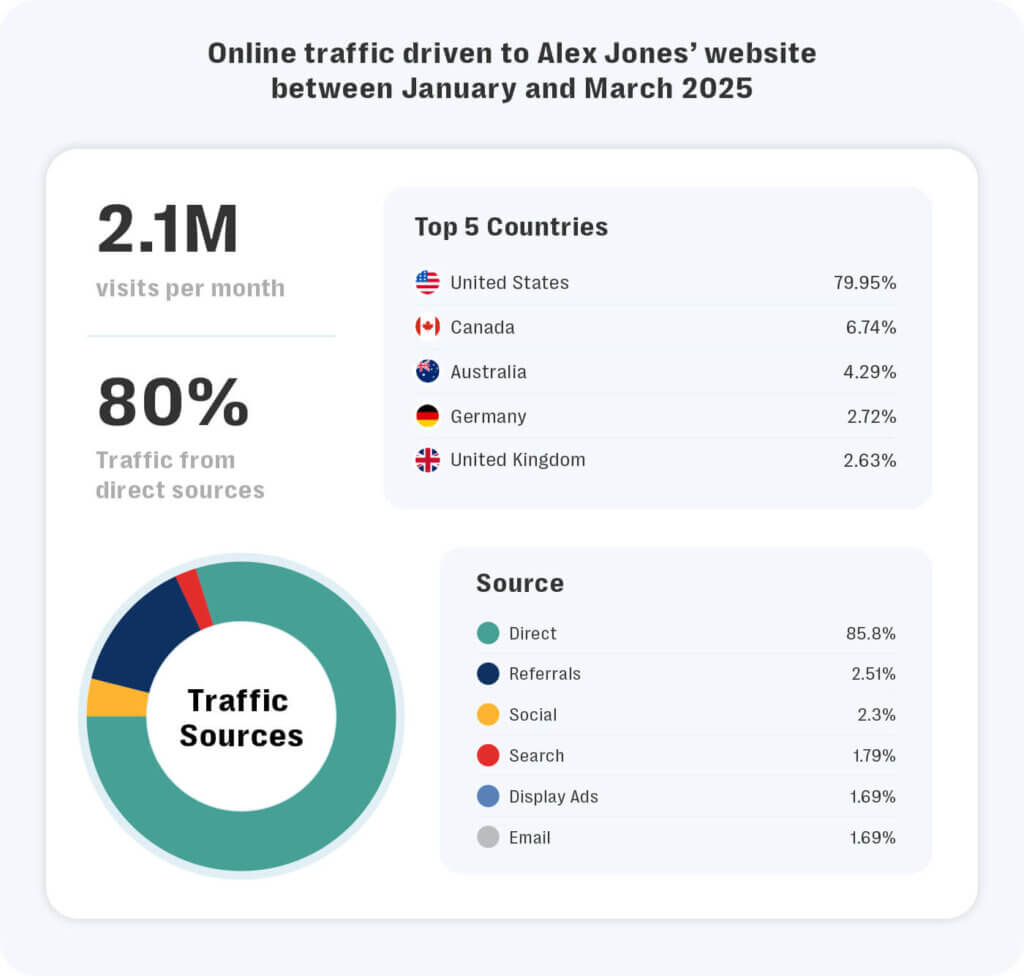

For example, when Alex Jones, the former owner of InfoWars, was removed from mainstream platforms, his already committed audience followed him to his new site, Banned.Video. There, he operates without platform oversight or content moderation, supported by the substantial following he built before his removal.

A Resolver review of traffic to Jones’s website between January and March 2025 found an average of 2.1 million monthly visits. Nearly 80% of this traffic came from direct searches, primarily from users in the United States, Australia, Germany, Canada and the United Kingdom. This pattern highlights a recurring risk: when disinformation moves off mainstream platforms, it often resurfaces in less moderated environments — where it can become more extreme and harder to monitor.

Trust and Safety Intelligence partners such as Resolver work with platforms, regulators and audiences to understand what mis- and disinformation looks like, how it is used to deceive and how to detect it day to day. This reduces the influence of disinformation over time and limits the harms that deception can drive.

What is the solution?

There is no single solution — and no single actor can address the problem alone. Platforms, regulators, trust and safety professionals, educators and communities all play a role in building resilience to disinformation.

It is increasingly clear that platforms alone cannot shoulder the responsibility of determining truth online. Given the complexity of safety and regulation, education and awareness are essential in countering false information.

Over the long term, information and digital literacy can help people understand how language can be manipulated, how statistics can be distorted, how images and videos can mislead and how historical narratives can be reframed or misrepresented. These skills can help inoculate social media users before they encounter false information at scale.

Resolver’s comprehensive Trust and Safety Intelligence helps some of the largest social media platforms and technology providers monitor and mitigate the spread of mis- and disinformation. To learn more about how our human-in-the-loop methodology blends automated detection with threat intelligence from a cross-disciplinary team of experts, please reach out.