Hate speech isn’t always obvious. In recent years, bad actors have increasingly exploited scientific studies, data, and language to spread racist and misogynist ideologies online. This tactic makes extremist content appear legitimate, allowing it to evade detection and spread on mainstream platforms.

As regulations like the Online Safety Act (OSA) in the UK and Digital Services Act (DSA) in the EU take effect, platforms face greater scrutiny over harmful content, even when it falls into a grey area. Resolver’s Trust & Safety experts continually work with platforms to identify the risks this form of hate speech can cause, as well as the real-world consequences for society as a whole.

Resolver’s Trust & Safety experts specialise in combining network intelligence with behavioural analysis by human experts to help platforms identify and mitigate these risks before they harm users or escalate into regulatory or reputational threats.

Scientific Language Used as Camouflage for Extremist Content

Since the 16th century, science has been misused within sections of western societies and academic institutions to justify racial supremacy, and in today’s digital landscape, this manipulation has been supercharged by social media. Bad actors exploit scientific studies to make extremist narratives appear legitimate, embedding these ideas into mainstream discourse and evading detection by trust and safety teams.

From the misrepresentation of scientific studies to the reformulation of debunked theories, the core principles have remained consistent. Social media platforms have provided those who promote these theories with a global audience. Bad actors exploit that reach to embed these ideas into the psyche of both willing and unwilling listeners. Scientific literature can thus be used as a tool to stoke tensions and stir up hatred both online and in society.

As regulations like the OSA and DSA come into force in the UK and the EU, social media platforms face increasing scrutiny. Identifying and mitigating this form of implicit hate speech is essential, not just for regulatory compliance, but to protect users and prevent reputational damage.

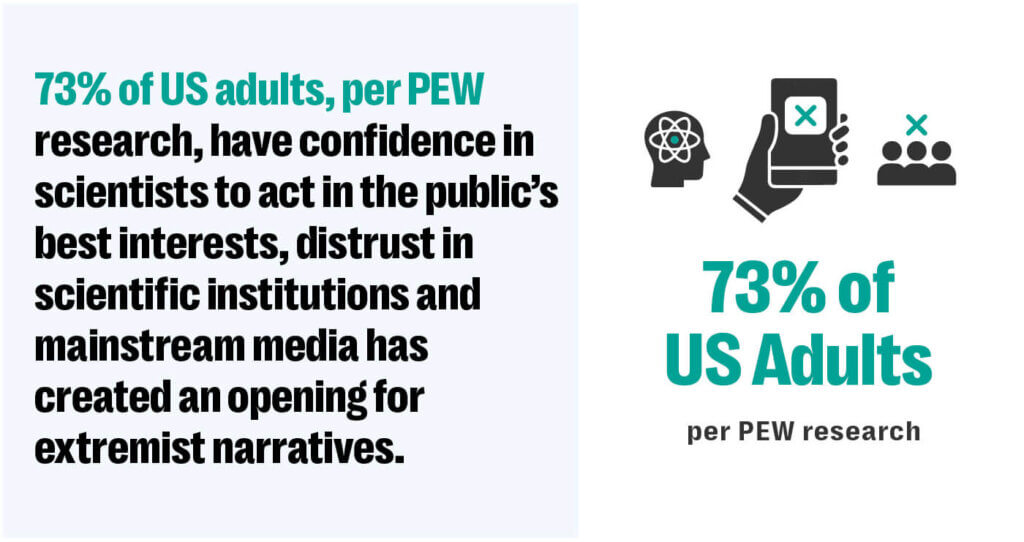

A key reason for the growth of these scientific theories in recent years is the increasing distrust in, and breakdown of, mainstream media and traditional scientific outlets. A Pew Research survey of over 9,000 US adults in October 2024 found that while 73% of US adults still had confidence in scientists to act in the public’s best interests, distrust in scientific institutions and mainstream media has created an opening for extremist narratives. This allows mis- and disinformation to spread unchecked as users look to alternative science influencers for ‘real’ information.

By identifying the risks and actors who disseminate this content, Resolver can provide our clients with key actionable insights into how bad actors may exploit their platform and how to mitigate the risk of this content forming part of an individual’s radicalisation pathway. Some of the most egregious forms of scientific content being amplified across mainstream and alt-tech social platforms include:

Race Realism: The Pseudo-Scientific Justification for Racism

“Race Realism” is a term used to promote the idea that racial differences are biologically determined and scientifically validated. Proponents use academic-style language to make racist ideologies seem credible and avoid social media content moderation policies. This approach is intended to make their narratives more acceptable to mainstream audiences.

So-called “Race Realists” have become increasingly vocal about their ideologies, framing traditional hateful narratives in the garb of unbiased scientific research to reduce the potential backlash. This tactic has proven extremely useful for those promoting ideas of racial supremacy or inferiority. It allows social media creators to discuss the topic openly, presenting it as scientific study rather than risking violations of social media platforms’ hate speech policies.

The dramatic rise in the accessibility of scientific literature has also allowed legitimate genetic studies to be exploited and misrepresented. This was seen with devastating clarity following the white supremacist-inspired mass shooting in Buffalo, New York, in 2022, when the perpetrator included a figure from a paper published in a leading journal in their 180-page manifesto.

American Renaissance: Mainstreaming Race Realism

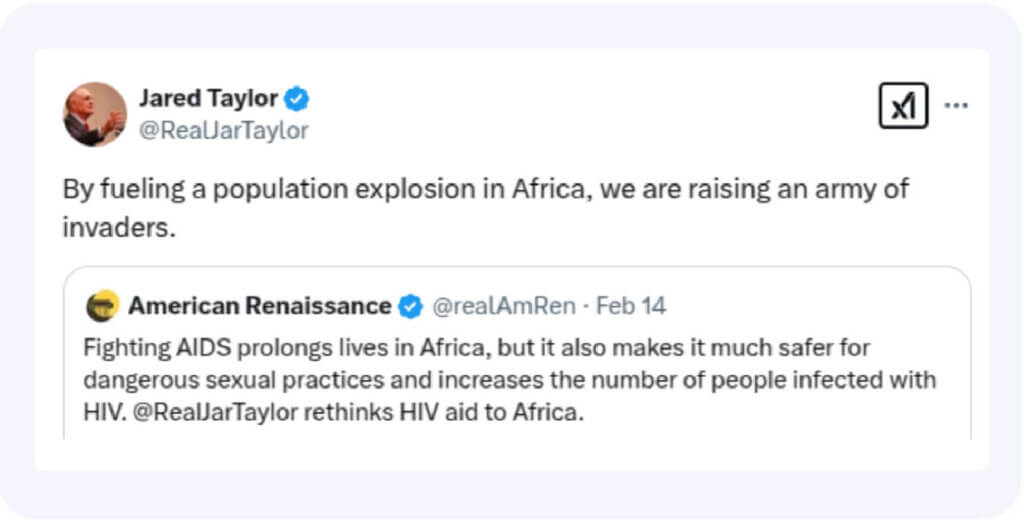

One key figure in the rise of race realism content across social platforms in the US and Europe is Jared Taylor, founder of American Renaissance, a think tank that promotes race realism and white identity. Taylor presents race realism as a crucial aspect required for societal understanding, claiming it is necessary to better accommodate societal needs.

Despite these idealistic claims, Taylor frequently uses xenophobic language to promote the theory of racial inferiority, particularly targeting African and South Asian populations. Due to his academic style of delivery, he is regularly featured as a guest on podcasts and online shows that are shared across both mainstream and alt-tech platforms to discuss these topics. By appearing on popular shows, Taylor can effectively circumvent moderation attempts and maintain a mainstream social media presence.

Post shared by Taylor linking longer life expectancy in Africa to increased migration.

The ability of Taylor and similar banned figures to share their narratives on popular social media channels with large audiences poses a significant challenge to social media platforms and the companies that advertise on them. It also exposes them to reputational damage through media reporting: recent investigations by UK advocacy group Hope Not Hate into the forces funding and driving these theories have highlighted their growing absorption on mainstream platforms.

Why is scientific racism a problem for social platforms?

- Disguised Hate Speech: Presenting racism as ‘scientific debate’ allows it to spread on mainstream platforms

- Exploitation of Scientific Studies: Research is ‘cherry-picked’ or intentionally misrepresented to support racist narratives

- Regulatory & Reputational Risks: Platforms hosting this content may face scrutiny from governments, legislators, advocacy groups, and advertisers.

How can Resolver Trust and Safety Intelligence help combat this threat?

- Advanced risk intelligence to detect and track narratives before they escalate

- Swift identification of key figures, actors, and content trends using behavioural intelligence and data science to help platforms proactively identify malicious networks and adjust policies

- Regulatory compliance insights to help platforms stay up to date on the latest tactics, techniques and procedures used by such users to evade moderation. This helps Trust and Safety teams navigate the complexities of new hate speech legislation

Incels & Looksmaxxing: Science Used to Justify Misogyny

The incel (involuntary celibate) community is vast and multifaceted. It weaponises scientific data to justify misogynistic beliefs, often citing research out of context to ‘prove’ male superiority or female hypergamy. The language of this community has been widely adopted into mainstream culture, to the extent that the origins of these terms are often unknown to mainstream users.

This presents a problem for platforms that must remove hateful content promoting violence towards women and misogynistic narratives while allowing non-violative content using this language to be shared. Incel forums feature hundreds of research articles sourced from major scientific papers and universities. Studies are cherry-picked to justify beliefs and explain why women in particular are to blame for their misogynistic ideology.

The majority of those within incel communities are unlikely to commit acts of real-world violence. However, those engaging with and creating this type of content can, when combined with additional vulnerability factors and circumstantial indicators, pose a direct risk of real-world harm. Faced with this supposedly overwhelming evidence, vulnerable individuals may be led to believe there is no alternative but to subscribe to the community they have been exposed to.

Like many other forms of radicalisation, exposure to and engagement with online content is key to this behaviour. The exploitation of scientific studies is just one way this online content can contribute to the risk of the radicalisation journey.

Looksmaxxing: From Grooming Tips to Harmful Body Modifications

A dangerous offshoot, Looksmaxxing, pushes users towards extreme self-improvement practices, ranging from harmless grooming tips such as skin routines and exercise to dangerous procedures like steroid abuse and bone-smashing. Looksmaxxing advocates predominantly aim to increase their “Sexual Market Value”, a score calculated from specific criteria. Some individuals aiming to monetise the surge in popularity have built the promotion of Looksmaxxing on debunked and discredited science.

Excerpt of a thread from an incel forum linking a study supporting scientific justification for male superiority.

A search for looksmaxxing on social media platforms returns thousands of results, with content regularly promoting links to paid-for guides filled with sensationalist claims. The ability to gain commercially from the creation and amplification of incel-related content can also incentivise content creators to produce instructional content that promotes harmful body modifications or unproven alternative treatments to their viewers. This trend is likely to pose greater issues in moderating incel content, particularly on platforms with creator programs. Should “incel influencers” be discovered to be directly profiting through promotion of this content, platforms could risk significant public backlash and compliance issues.

Because of Looksmaxxing’s origins, the connections to the Incel community are dangerously apparent. Suggested content after viewing looksmaxxing posts frequently includes more explicit Incel narratives. This exposure highlights the risk that mainstream adoption of terminology originating from Incel communities directs users back to its origins in hateful communities.

Why is incel content a problem for social platforms?

- Radicalisation Pathways: Content promoting Looksmaxxing often leads users toward deeper incel ideology

- Monetisation & Harmful Influence: Users are pushed towards paid guides, courses, and extreme body modification trends

- Challenges in Moderation: Looksmaxxing itself isn’t inherently harmful, making it difficult to moderate and track without clear violations

How can Resolver Trust and Safety Intelligence help combat this threat?

- Identifying gateway content that leads users towards radicalisation

- Tracking monetised harmful content to help platforms disrupt revenue streams from accounts amplifying violative content

- Early warning systems to alert platforms to emerging trends and techniques used by bad actors to skirt moderation before they gain traction

Combating the spread of scientific racism with Resolver

Hate speech disguised as academic discourse presents a unique challenge for social media platforms. Unlike explicit racism or misogyny, this content often falls into the ‘grey’ area: it does not always violate guidelines, yet still fosters an environment where hateful views and stereotypes can flourish. With the introduction of more stringent legislation, calls by public bodies for social media platforms to do more to address this nuanced hate speech have grown louder. Increasingly, platforms will have to take action to avoid regulatory fines, user harm and radicalisation, and reputational damage.

Left unaddressed, these narratives are likely to persist on mainstream social media, because their nuanced nature means they often fall outside current platform guidelines. Identifying and reporting the figures who push these narratives is crucial if platforms are to mitigate the risk this content can pose. It may be the case that a platform’s current hate speech policies don’t cover an emerging harmful narrative or that they aren’t able to act on it.

Resolver’s Trust and Safety team continually monitors for “non-violative risks”, giving our partners crucial insights into content and trends that don’t explicitly violate their guidelines but can pose a significant risk in terms of media scrutiny or harm to users. This intelligence enables trust and safety teams to take preemptive measures or make policy updates to ensure harmful content can be actioned.

In either case, Resolver’s 20 years of experience in Trust & Safety Intelligence can provide the insight needed to make those decisions and mitigate the “unknown unknown” risks that can cause significant reputational damage.