If you lead a security operations or investigations team, you can’t scroll LinkedIn without bumping into the AI hype. It sounds promising, especially when your security team is short-staffed or juggling multiple sites. But when a vague report hits your inbox after hours, the buzz won’t save you.

To make an impact, AI-powered incident management solutions have to work the way security teams actually operate.

If you’ve used tools like ChatGPT or Copilot, you know they’re fast at writing and summarizing text. They serve their purpose, but they weren’t built for high-stakes security environments. They won’t flag a weapon or assess urgency. They won’t auto-launch a playbook tailored to the incident’s severity, department, or location. Your screener still has to fill in the blanks and move the report forward. Sometimes, that means printing it and pushing a piece of paper across a desk.

Now imagine a report comes in after hours through a static form or hotline submission: “There’s been a theft. I saw a man pull a knife and threaten a staff member. I think it was Josh Lee.” No subject line, location, or context. That’s common when people are rushed or shaken. They say what they saw and expect the system to handle the rest and get it to the right person.

Teams dealing with incident intake every day need support that actually fits the job. I’ve managed investigations and security teams long enough to know this pain isn’t theoretical. The admin grind, vague reports, and missed context slow everything down. That’s why I started building tools that actually work for people in the field.

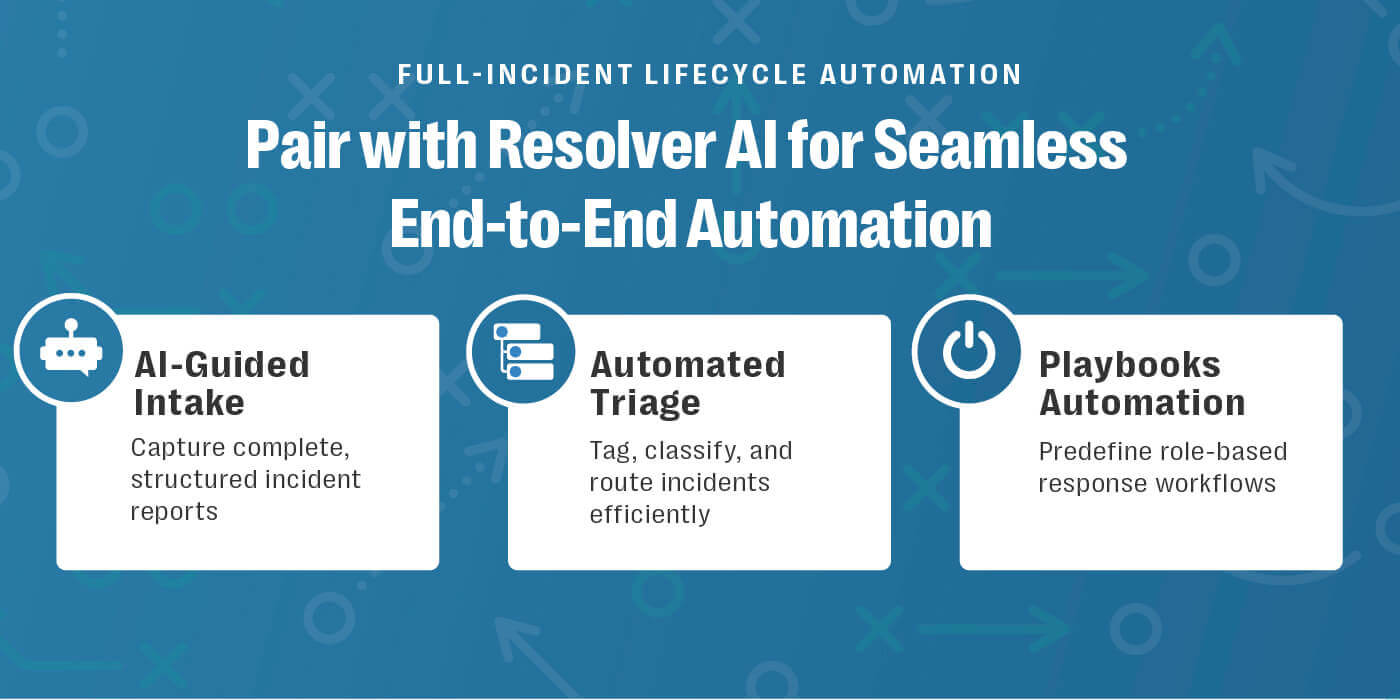

AI for security teams can’t simply be bolted onto existing solutions. It needs to fit your workflow and give full visibility every step of the way. It must support human judgment and your team’s expertise, and you have to be able to trust it. When done right, AI-powered incident management guides every report submission, prompting for what’s missing. It triages every report and routes it quickly to the right team. And it needs to work under pressure, every shift, no matter who’s on.

So that’s what we set out to build.

Why manual incident intake slows down security teams

No security leader signs up to spend hours sorting inboxes. But that’s the reality. As one Resolver customer put it, “The real risk is buried in vague reports. Our team ends up playing detective just to tag the basics.”

Corporate security teams face a flood of reports: hotline tips, inbox messages, and anonymous portal entries. Most aren’t complete enough to act on. That leads to wasted time chasing details, tagging locations, and tracking down who needs to see what. I call this “bad admin,” and it adds up to hours lost each shift. Without automation that works for you, your investigative team is likely still:

- Chasing missing information

- Tagging threats, names, and locations

- Forwarding reports to the right team

- Checking which SOP applies (and where the playbook lives)

This busywork drains capacity and lets real risks stack up. It’s why nearly 70% of leaders in a 2025 Resolver-ASIS survey put automation at the top of their investment list.

The problem typically isn’t report volume. It’s the lack of a system that brings structure, context, and speed to the important work you were hired to do. Legacy tools, traditionally built for IT or HR tickets, don’t fit the realities of physical and corporate security. Your analysts are still left chasing down the admin themselves.

Modern incident management automation, built into a centralized, purpose-built system, changes that. It prompts for what’s missing, flags what matters, and routes cases without delay. When you bring order to intake and triage, you give teams time back and let them focus on real investigations.

What does AI-powered incident management automation look like in a security context?

Ask any group of security pros about writing incident reports and you’ll hear the same frustrations. Traditional triage methods slow investigations when reports come in vague, incomplete, or missing key details. That’s where AI-powered intake and triage make a meaningful difference. They’re designed to guide the reporter, prompt for what’s missing in the report, flag risks like weapons or injuries, and make sure every submission arrives with the right detail and context, in a consistent format.

What does this mean for your team?

- Guided intake. A conversational assistant prompts for the right details, so nothing gets missed.

- More complete reports. Fewer gaps, fewer vague entries.

- Instant routing. The system sends each report to the right team, based on incident type, severity, or location.

- Consistent, structured response. Even if the original report is a mess, your process stays organized.

- Less “bad admin.” Your team can focus on investigations, not inbox triage.

When that admin load comes off your team’s plate, people spend more time investigating trends and less time chasing missing info. And when those insights land on your desk in a structured report, it’s easier to make the case to leadership for more budget.

Teams that previewed Automated Intake and Triage reported a 50 to 80 percent drop in follow-ups during intake. That’s a real improvement that means less delay and more time for investigators to act.

AI that lightens the admin load, not the security team

It’s important to be clear: Resolver’s AI-powered incident management solution doesn’t replace your team’s real-world judgment. This isn’t generic AI that tries to run your operation or make final calls. We designed it to run within your existing incident workflows, follow your rules, and create a complete, auditable record from the first report onward. Whether it’s a seasoned investigator or a new hire on call, the system prompts for every detail and follows your process from start to finish.

Your analysts and security teams weren’t hired to copy/paste or tag fields. They were hired to investigate, assess risk, connect the dots, respond and report. AI-powered incident management gives them time back, so they can focus on delivering strategic insights and better outcomes, more consistently. It also reduces the risk of missing information that legal or leadership might question later.

Whether the report comes in at 2 p.m. or 2 a.m., the process is the same: complete, accurate, fast, and transparent. Our automated intake and triage was designed to give you consistency when you need it most: under pressure, across shifts, and across teams.

That’s what AI should do: get the busywork out of the way, so your people can do their jobs better and make an impact.

What gets automated (and what stays in your control)

Resolver’s intake assistant doesn’t guess. It follows the protocols you set, from your classifications and routing rules to your SOPs. The system guides the reporter, tags key details, and launches the right Playbook. And it logs every step.

If something feels off, your team can reroute, reclassify, or adjust it at any point. The automation speeds up admin, but your people always stay in control.

Automated Intake and Triage isn’t about replacing people. It’s about protecting them and giving your teams best-in-class tools to act fast, document clearly, and control risk. That’s the difference between summarizing a problem and solving one.

Want to see how Resolver’s triage assistant works in real security workflows? See it in action here.

About the author: Artem Sherman is the Division Head of Security & Investigations at Resolver, with more than 20 years of experience in corporate security leadership. A former practitioner, he now focuses on building tools that cut administrative drag and help teams respond faster. Artem is known for delivering practical strategies that improve efficiency, strengthen investigations, and drive real operational outcomes.

AI-Powered Automated Intake and Triage: Frequently Asked Questions

1. What is AI-powered automated intake and triage?

AI-powered automated intake and triage uses artificial intelligence to organize, categorize, and route security data, helping teams streamline their workflows and reduce manual admin tasks.

2. Is this just ChatGPT for security?

No. This system uses domain-specific AI focused on structuring data and launching workflows, not on generating text or stories.

3. Will this replace my security analysts?

No. The AI automates routine admin tasks, allowing analysts to focus on investigation, risk assessment, and effective response.

4. Is the system configurable to our environment?

Yes. You can define your own workflows, classifications, and SOPs. The AI operates within your structure and adapts to your collection goals.

5. Does it work in the field?

Yes. The system supports mobile use, offline access, and multi-language inputs, making it practical for field operations.

6. Can we track what the AI does?

Yes. Every decision, tag, and routing action is logged for full transparency. Your team remains in control and can review every step.

7. What happens if the AI makes a mistake?

Your team always has the final say. Reports can be reclassified, rerouted, or adjusted as needed. Automation speeds up admin, not judgment.